Mini Review - (2022) Volume 14, Issue 8

An overview of the use of multi-modality fusion and deep learning for medical image segmentation

Sadia Stefano*

Department of Internal Medicine and Allergology, Cantonal Hospital, Italian Hospital, Lugano, Switzerland

*Correspondence:

Sadia Stefano, Department of Internal Medicine and Allergology, Cantonal Hospital, Italian Hospital, Lugano, Switzerland

Email:

Received: 01-Aug-2022, Manuscript No. ipaom-22-13192;

Editor assigned: 03-Aug-2022, Pre QC No. P-13192;

Reviewed: 08-Aug-2022, QC No. Q-13192;

Revised: 13-Aug-2022, Manuscript No. R-13192;

Published: 18-Aug-2022

Abstract

Because multi-modality imaging provides several kinds of information about a target (tumor, organ, or tissue), it is frequently used in medical imaging. Multi-modality segmentation fuses these information sources to improve the segmentation. In recent years, deep learning approaches have achieved state-of-the-art results in image classification, segmentation, object detection, and tracking. Owing to their capacity for self-learning and for generalizing across large amounts of data, deep learning methods have recently also attracted interest for multi-modal medical image segmentation. In this paper, we present an overview of deep learning-based methods for the multi-modal medical image segmentation task. First, we introduce multi-modal medical image segmentation and the general principles of deep learning. Second, we compare and contrast the results of different fusion strategies and deep learning network architectures. Earlier fusion is widely used because it is simple and lets the work focus on the design of the subsequent segmentation network. Later fusion, by contrast, places greater emphasis on the fusion strategy itself, in order to learn the complex relationships between the modalities. If the fusion strategy is effective enough, later fusion can generally yield more accurate results than earlier fusion. We also discuss some common problems in medical image segmentation. Finally, we summarize the review and offer some perspectives on future research.

Keywords

Deep learning, Medical image segmentation, Multi-modality fusion

INTRODUCTION

With the development of medical image acquisition systems, multi-modality segmentation has been the subject of extensive research. Successful image fusion strategies include approaches based on probability theory, fuzzy set theory, belief function theory, and machine learning. For methods based on probability theory and machine learning, different data modalities have different statistical properties, which makes them hard to model with shallow models. In methods based on fuzzy set theory, a fuzzy measure quantifies each source's degree of membership with respect to a decision, and several sources are combined by applying fuzzy operators to the resulting fuzzy sets. In methods based on belief function theory, each source is first modeled by an evidential mass, and the Dempster-Shafer rule is then used to fuse all sources. The main obstacle to using belief function theory and fuzzy set theory is the choice of the evidential mass, the fuzzy measure, and the fuzzy conjunction function. A deep learning-based network, however, can encode the mapping from the input modalities to the output directly. As a result, deep learning-based approaches have good potential to achieve better fusion results than conventional approaches.
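To make the belief-function approach more concrete, the sketch below implements Dempster's rule of combination for two sources, together with the min t-norm as one common choice of fuzzy conjunction operator. It is a minimal illustration rather than a method from this review: the two-class frame {tumor, healthy} and the mass values assigned to the hypothetical MRI and CT sources are invented for the example.

```python
from itertools import product


def fuzzy_and(mu_a, mu_b):
    """Min t-norm: one common fuzzy conjunction of two membership degrees."""
    return min(mu_a, mu_b)


def dempster_combine(m1, m2):
    """Fuse two mass functions with Dempster's rule of combination.

    Each mass function maps a focal element (a frozenset of class labels)
    to its mass; the masses of each source are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0  # total mass that would fall on the empty set (K)
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalise by 1 - K so that the fused masses sum to 1 again.
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}


# Hypothetical evidence about a single voxel from two modalities.
TUMOR, HEALTHY = "tumor", "healthy"
OMEGA = frozenset({TUMOR, HEALTHY})  # mass on the whole frame = "don't know"
m_mri = {frozenset({TUMOR}): 0.6, OMEGA: 0.4}
m_ct = {frozenset({TUMOR}): 0.3, frozenset({HEALTHY}): 0.5, OMEGA: 0.2}

fused = dempster_combine(m_mri, m_ct)
print({tuple(sorted(k)): round(v, 3) for k, v in fused.items()})
```

In this toy case, 0.30 of the joint mass is conflicting (the MRI source's {tumor} against the CT source's {healthy}), so the remaining masses are divided by 0.7, and the fused mass on {tumor} is 0.42 / 0.7 = 0.6. Choosing these input masses is exactly the modeling burden the text describes.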

Description

A number of deep convolutional neural network models, including AlexNet, ZFNet, VGG, GoogLeNet, ResNet, DenseNet, FCN, and U-Net, have been proposed since 2012. In addition to delivering state-of-the-art performance in image classification, segmentation, object detection, and tracking, these models offer a new perspective on image fusion. Their success can be attributed mainly to four factors. First, advances in neural networks are the primary reason for deep learning's remarkable success over traditional machine learning models: deep learning learns increasingly high-level features from data, which eliminates the need for domain expertise and hand-crafted feature extraction, and it solves the problem end to end. Second, the development of GPU-computing libraries allows models to be trained 10 to 30 times faster on GPUs than on CPUs, and open-source software packages provide GPU implementations. Third, publicly accessible datasets such as ImageNet allow researchers to train and test new variants of deep learning models. Finally, deep learning owes its success to a number of efficient optimization techniques, including dropout, batch normalization, and the Adam optimizer. The ReLU activation function and its variants also help the weights to be updated effectively and improve performance [1].
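The optimization ingredients listed above are simple to state. The NumPy sketch below shows ReLU and one of its variants (leaky ReLU), inverted dropout, and a single Adam update step with the commonly cited default hyperparameters (lr = 1e-3, beta1 = 0.9, beta2 = 0.999, eps = 1e-8). It is illustrative only: the toy parameters and gradients are made up, batch normalization is omitted for brevity, and deep learning frameworks ship tuned implementations of all of these.

```python
import numpy as np


def relu(x):
    """ReLU: passes positive inputs through and zeroes negative ones."""
    return np.maximum(x, 0.0)


def leaky_relu(x, slope=0.01):
    """A common ReLU variant that keeps a small slope for x < 0."""
    return np.where(x > 0, x, slope * x)


def dropout(x, p, rng):
    """Inverted dropout (training time): zero each unit with probability p
    and rescale the survivors so the expected activation is unchanged."""
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)


def adam_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad       # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v


rng = np.random.default_rng(0)
x = np.array([-2.0, 0.5, 3.0])
print(relu(x), leaky_relu(x), dropout(x, p=0.5, rng=rng))

# A single Adam step on a toy two-parameter model with a made-up gradient.
w = np.array([1.0, -2.0])
grad = np.array([0.5, -4.0])
m = np.zeros_like(w)
v = np.zeros_like(w)
w, m, v = adam_step(w, grad, m, v, t=1)
```

On the first step, the bias correction makes the update roughly `lr * sign(grad)` for each parameter, which is one reason Adam is relatively insensitive to the raw scale of the gradients.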

Motivated by the success of deep learning, medical image researchers have also applied deep learning-based approaches to the segmentation of the brain, pancreas, prostate, and multiple organs.

Medical image segmentation is a crucial component of medical image analysis for diagnosis, monitoring, and treatment. Its objective is to assign a label to each image pixel, which typically involves two phases: first, locating the diseased tissue or regions of interest; second, delineating the different anatomical structures or regions of interest. In medical image segmentation, these deep learning-based methods have outperformed conventional methods.
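Labeling every pixel is usually implemented by having a network output one score map per class and taking the most probable class at each pixel. The NumPy sketch below assumes such a (classes, height, width) score array, filled with hand-set toy values rather than the output of a trained model, and adds a bounding-box helper as a deliberately crude stand-in for the first phase (locating the region of interest). The class names and the helper `roi_bbox` are hypothetical.

```python
import numpy as np


def softmax(logits, axis=0):
    """Numerically stable softmax over the class axis."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def label_pixels(logits):
    """Turn per-class scores of shape (C, H, W) into an (H, W) label map."""
    probs = softmax(logits, axis=0)
    return probs.argmax(axis=0), probs


def roi_bbox(labels, background=0):
    """Bounding box (row0, row1, col0, col1) of all non-background pixels;
    a crude stand-in for the 'locate the region of interest' phase."""
    rows, cols = np.nonzero(labels != background)
    if rows.size == 0:
        return None
    return int(rows.min()), int(rows.max()) + 1, int(cols.min()), int(cols.max()) + 1


# Toy 3x3 image with three hypothetical classes:
# 0 = background, 1 = organ, 2 = lesion.
logits = np.zeros((3, 3, 3))
logits[0] = 1.0        # background wins by default everywhere
logits[1, 1, 2] = 3.0  # one organ pixel
logits[2, 1, 1] = 5.0  # one lesion pixel

labels, probs = label_pixels(logits)
print(labels)
print(roi_bbox(labels))  # (1, 2, 1, 3)
```

In a real two-phase pipeline, the second phase would typically re-run a finer segmentation inside the cropped region rather than reuse the coarse labels.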

Using multi-modal medical images has become an increasingly common strategy for improving segmentation and diagnosis. On July 17, 2019, a literature search for a comprehensive review was run with the keywords "deep learning," "medical image segmentation," and "multi-modality." We can see that the number of papers increased steadily from 2014 to 2018, which indicates that multi-modal medical image segmentation with deep learning has attracted growing attention in recent years [2,3].

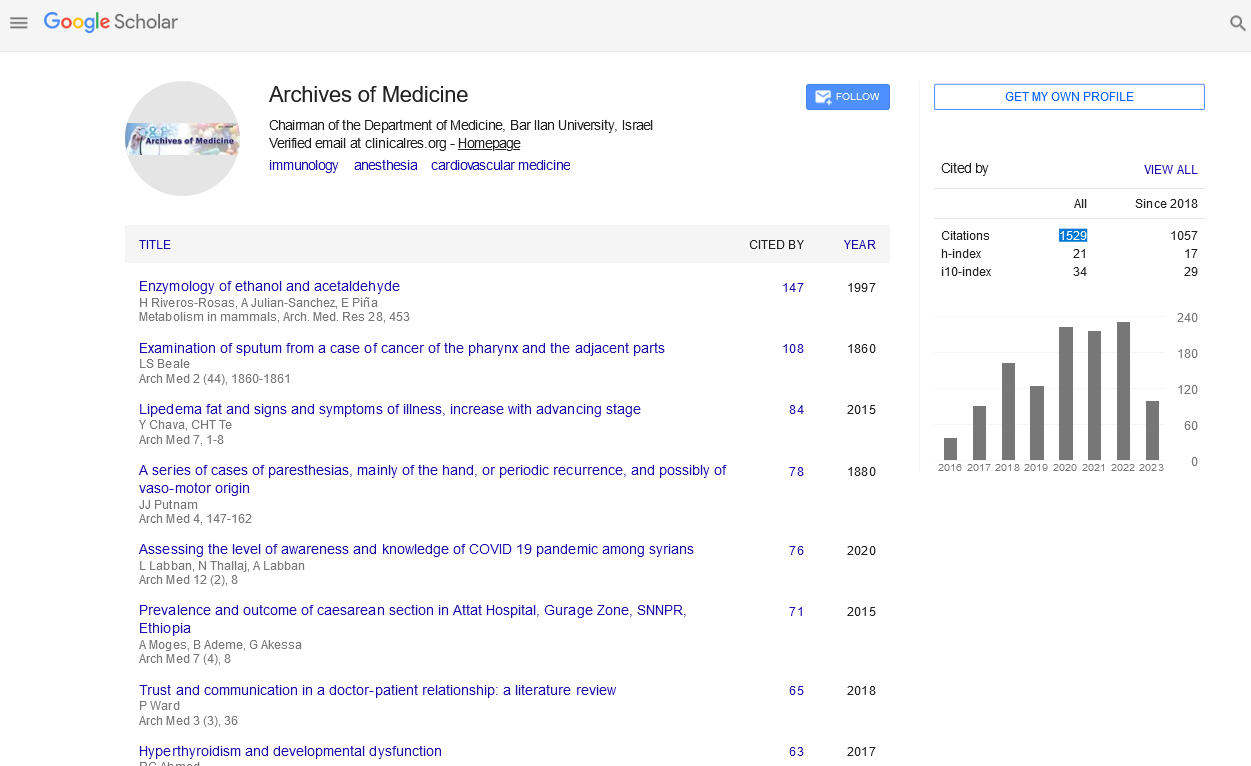

To better understand the scope of this research field, we compare the scientific output of the image segmentation community, the medical image segmentation community, and the community working on medical image segmentation with multi-modality fusion, in each case with and without deep learning. As the graph shows, the number of papers using methods other than deep learning is declining, whereas the number of papers using deep learning methods is rising in all of these research fields. Because datasets are limited, classical methods still hold the upper hand, especially in medical image segmentation; however, there is a clear and growing trend toward methods that employ deep learning.

Medical image analysis uses computed tomography (CT), positron emission tomography (PET), and magnetic resonance imaging (MRI). Compared with single-modality images, multi-modal images improve the representation of the data and the discriminative power of the network, because they bring together information from multiple perspectives and allow features to be extracted from each of them [4-6].

MR images provide good soft-tissue contrast without ionizing radiation, whereas CT images can be used to diagnose muscle and bone disorders such as bone tumors and fractures. Functional images such as PET provide quantitative metabolic and functional information about diseases, but they lack anatomical detail.

MRI can provide complementary information because it depends on variable acquisition parameters, which yield, for example, T1-weighted (T1), contrast-enhanced T1-weighted (T1c), T2-weighted (T2), and fluid-attenuated inversion recovery (FLAIR) images. T2 and FLAIR images can detect a tumor together with its peritumoral edema, whereas T1 and T1c images show only the tumor core without the edema. Using multi-modal images can therefore make clinical diagnosis and segmentation more accurate and reduce information uncertainty. In, we discuss a few popular multi-modal medical images. Earlier fusion is straightforward, and most works use it for segmentation. It concentrates on designing complex segmentation network architectures after the fusion step, but it does not take into account the relationship between the modalities and does not investigate how to fuse their feature information to improve segmentation performance.
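Earlier fusion is commonly implemented at the input level: the modalities are stacked as channels of one image and fed to a single network. The NumPy sketch below illustrates this under stated assumptions: the four MRI sequences (T1, T1c, T2, FLAIR) are already co-registered to the same voxel grid, per-modality z-score normalization is an assumed preprocessing step rather than one prescribed here, random arrays stand in for real scans, and a 1x1x1 convolution with random weights stands in for the first layer of whatever segmentation network follows. The helper names are hypothetical.

```python
import numpy as np

# Channel order for the four MRI sequences named in the text.
MODALITIES = ("T1", "T1c", "T2", "FLAIR")


def zscore(volume, eps=1e-8):
    """Normalise one modality to zero mean and unit variance (assumed step)."""
    return (volume - volume.mean()) / (volume.std() + eps)


def early_fusion_input(volumes):
    """Earlier (input-level) fusion: stack co-registered modalities as channels.

    volumes: dict mapping modality name -> array of identical shape (D, H, W).
    Returns an array of shape (num_modalities, D, H, W) for a single network.
    """
    shapes = {v.shape for v in volumes.values()}
    if len(shapes) != 1:
        raise ValueError("modalities must be co-registered to one voxel grid")
    return np.stack([zscore(volumes[name]) for name in MODALITIES], axis=0)


def one_by_one_conv(x, weights, bias):
    """1x1x1 convolution: mixes channels independently at every voxel.
    x: (C_in, D, H, W), weights: (C_out, C_in), bias: (C_out,)."""
    return np.einsum("oc,cdhw->odhw", weights, x) + bias[:, None, None, None]


rng = np.random.default_rng(0)
# Random arrays stand in for real, co-registered MRI volumes.
volumes = {name: rng.normal(100.0, 20.0, size=(4, 8, 8)) for name in MODALITIES}
x = early_fusion_input(volumes)
w = rng.normal(size=(16, len(MODALITIES)))
features = np.maximum(one_by_one_conv(x, w, np.zeros(16)), 0.0)  # first layer + ReLU
print(x.shape, features.shape)  # (4, 4, 8, 8) (16, 4, 8, 8)
```

Because the modalities are merged before the first layer, everything after `early_fusion_input` is an ordinary single-stream network, which is why earlier fusion leaves the design effort to the segmentation architecture and does not model inter-modality relationships explicitly.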

Later fusion, by contrast, pays more attention to the fusion problem: each modality is fed to its own network, which can learn complex and complementary feature information from that modality before the features are fused. If the fusion method is effective enough, later fusion can generally achieve better segmentation performance than earlier fusion. In practice, however, the choice of fusion strategy depends on the problem at hand [7-10].
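For contrast, the sketch below shows one simple form of later fusion, assuming co-registered T1, T1c, T2, and FLAIR volumes: each modality passes through its own branch with separate weights, and the branch features are then concatenated and mixed by a learned fusion layer before a per-voxel classifier. The class name `LateFusionSketch`, the branch width, and the single-layer branches are hypothetical simplifications, and the weights are random rather than trained, so the predicted labels are meaningless beyond their shape. Real later-fusion networks use deep convolutional branches and often richer fusion modules.

```python
import numpy as np

MODALITIES = ("T1", "T1c", "T2", "FLAIR")


def conv1x1(x, weights):
    """1x1x1 convolution: x (C_in, D, H, W), weights (C_out, C_in)."""
    return np.einsum("oc,cdhw->odhw", weights, x)


def relu(x):
    return np.maximum(x, 0.0)


class LateFusionSketch:
    """Later fusion: one independent branch per modality, then a fusion
    layer over the concatenated branch features, then a classifier head."""

    def __init__(self, n_classes=2, branch_channels=8, rng=None):
        if rng is None:
            rng = np.random.default_rng(0)
        # Each branch sees a single-channel input and has its own weights.
        self.branches = {m: rng.normal(size=(branch_channels, 1)) for m in MODALITIES}
        fused_in = branch_channels * len(MODALITIES)
        self.fusion = rng.normal(size=(branch_channels, fused_in))
        self.head = rng.normal(size=(n_classes, branch_channels))

    def __call__(self, volumes):
        # 1) Modality-specific features, computed separately per branch.
        feats = [relu(conv1x1(volumes[m][None], self.branches[m])) for m in MODALITIES]
        # 2) Fusion: concatenate along channels and mix with a learned layer.
        fused = relu(conv1x1(np.concatenate(feats, axis=0), self.fusion))
        # 3) Per-voxel class scores; argmax gives the label map.
        logits = conv1x1(fused, self.head)
        return logits.argmax(axis=0)


rng = np.random.default_rng(1)
# Random arrays stand in for real, co-registered MRI volumes of shape (D, H, W).
volumes = {m: rng.normal(size=(4, 8, 8)) for m in MODALITIES}
model = LateFusionSketch(n_classes=3, rng=np.random.default_rng(2))
labels = model(volumes)
print(labels.shape)  # (4, 8, 8)
```

The design point is where the modalities meet: here they are combined only after modality-specific features exist, which gives later fusion room to model inter-modality relationships, at the cost of one branch per modality.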

Conclusion

A few other reviews also cover deep learning-based medical image analysis, but fusion strategies are not their primary focus. For example, Litjens et al. reviewed the key concepts of deep learning in medical image analysis, and Bernal et al. gave an overview of deep CNNs for brain MRI analysis. In this paper, we concentrate on fusion techniques for multi-modal medical images for the purpose of medical image segmentation. The remainder of the paper is organized as follows. Section 2 discusses multi-modal medical image segmentation and the general principles of deep learning. Section 3 explains how to prepare the data before feeding it to the network. Section 4 discusses in depth multi-modal segmentation networks based on different fusion strategies. Section 5 covers some common issues that have arisen in the field. Finally, we provide a synopsis and discuss the outlook for multi-modal medical image segmentation.

REFERENCES

- Whitcher B, Tuch DS, Wisco JJ et al. Using the wild bootstrap to quantify uncertainty in diffusion tensor imaging. Hum Brain Mapp. 2008;(3):346-362.

- Unger J, Hebisch C, Phipps JE et al. Real-time diagnosis and visualization of tumor margins in excised breast specimens using fluorescence lifetime imaging and machine learning. Biomed Opt Express. 2020;11(3):1216-1230.

- Sacha D, Senaratne H, Kwon BC et al. The role of uncertainty, awareness, and trust in visual analytics. IEEE Trans Vis Comput Graph. 2015;22(1):240-249.

- Schlachter M, Raidou RG, Muren LP et al. State‐of‐the‐art report: Visual computing in radiation therapy planning. Comput Graph. 2019;38(3):753-779.

- Schiemann T, Freudenberg J, Pflesser B et al. Exploring the visible human using the voxel-man framework. Comput Med Imaging Graph. 2000;24(3):127-132.

- Pham DL, Xu C et al. Current methods in medical image segmentation. Ann Rev Biomed Eng. 2000;2(1):315-37.

- Nair T, Precup D, Arnold DL et al. Exploring uncertainty measures in deep networks for multiple sclerosis lesion detection and segmentation. Med Image Anal. 2020;59:101557.

- Maleike D, Unkelbach J, Oelfke U. Simulation and visualization of dose uncertainties due to interfractional organ motion. Phys Med Biol. 2006;51(9):2237.

- Lundström C, Ljung P, Persson A et al. Uncertainty visualization in medical volume rendering using probabilistic animation. IEEE Trans Vis Comput Graph. 2007;13(6):1648-1655.

- Le Folgoc L, Delingette H, Criminisi A et al. Quantifying registration uncertainty with sparse bayesian modelling. IEEE Trans Med Imaging. 2016;36(2):607-617.
