Key words

Curriculum evaluation, education, evaluation, evaluation models, nursing, nursing education

Introduction

Evaluation has been defined by Oerman and Gaberson [1] as “a process of making judgements about student learning and achievement, clinical performance, employee competence, and educational programs, based on assessment data”. Keating [2] defined evaluation as “a process by which information about an entity is gathered to determine its worth” and involves making “value judgements about learners, as value is part of the word evaluation”. Evaluation is used in various professional contexts on a daily basis in order to make decisions for complex matters that require individuals or methods of practice to be either certified, secured or improved. With regard to the educational context, many of the terms, concepts, and theories of educational evaluation originated from business models, and have been adapted to education, especially in light of an increased emphasis on outcomes.

A variety of evaluation approaches have been developed throughout the relatively short but plentiful life of evaluation. Evaluation in education has received both criticism and approval from the scientific community. Many authors expressed their scepticism about the application of evaluation in education, and have discussed the difficulties of implementing evaluation theory in practice. [3-7] From the early years of evaluation, programme evaluation was considered as a problematic issue for several reasons. The impracticality of evaluation instruments, the lack of students’ involvement in the evaluation process, the low response rate and poor commitment of faculty staff are some of the issues that have thrown doubt on the practicality of programme evaluation. In the past, programme evaluation was characterised as a time-consuming, monotonous procedure, with doubtful results and struggling processes. [8,9,10] Others considered evaluation as a necessary but complex component of curriculum design, development and implementation. [11] Traditionally, the complexity of evaluation was highlighted and, for this reason, evaluation was the least understood and the most neglected element of curriculum design and development. [12] In the same context, however, programme evaluation was considered as an important element of programme development, despite being neglected due to its complex nature and the increased problems for policy makers and programme planners. [13]

Different views were presented in the past by various authors who revealed the constructive nature of evaluation and claimed that evaluation is a vital component of programme development. Rolfe [10], for example, who expressed concerns about the practicality of educational evaluation, also emphasised that evaluation is an important element of curriculum development and implementation. O’Neill [8] stressed that evaluation is one of the most significant facets of curriculum development, even if it is carried out solely for the purpose of providing the faculty with a sense of security. In addition, Shapiro [14] and Grant-Haworth and Conrad [15] associate the notion of quality with evaluation and consider evaluation a prerequisite for developing and sustaining high-quality educational programmes. The authors underscored that programme quality and programme evaluation have been strongly emphasised in higher education, despite the fact that evaluators and educators often conveyed criticism and divergent opinions.

Historically, these contrasting views highlight the value of educational evaluation as well as its complexity and impracticality. These can be the reasons for poor and unsuccessful implementation of evaluation in practice. Despite the opposing views on the utilisation and usefulness of programme evaluation, however, there is general agreement among earlier and later authors that evaluation is an essential part of the educational process. [4,5,7,16-19] Perhaps this is the reason that successive attempts have been made throughout the 20th century to evaluate educational programmes and curricula. These attempts will be reviewed in chronological order.

Aim of the study

The aim of the present paper was to review the history of evaluation in nursing education, and to highlight its contribution to modern evaluation thinking.

Methodology

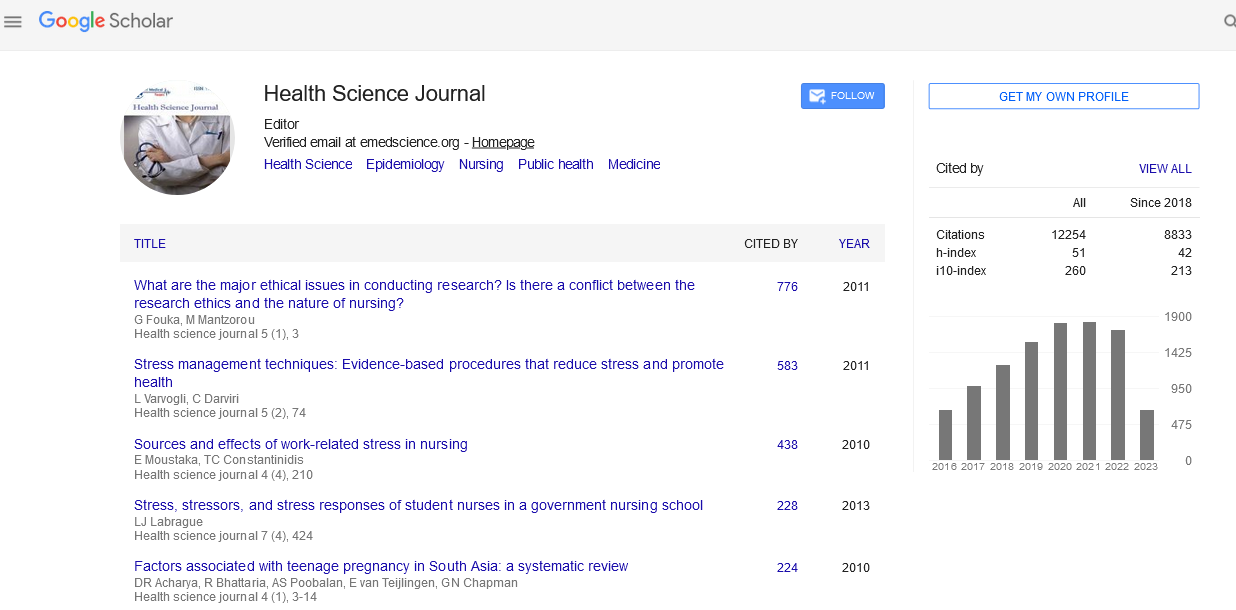

A literature search of the electronic databases ProQuest, Google Scholar, CINAHL+ and PubMed was conducted. The literature search was not limited to certain years, since the purpose of the paper was to present a thorough historical review of evaluation in education. Literature was reviewed from the nursing, education, and evaluation disciplines. The benchmarking of texts on evaluation and education formed the skeleton of the paper. Articles and documents that described evaluation theories and methods in education, evaluation approaches in nursing education and development, and the implementation of educational evaluation models in practice were included in the review. Keywords used in the literature search were: evaluation, education, curriculum evaluation, evaluation models, nursing, nursing education.

Results

An early attempt at curriculum evaluation was made by Tyler [20] in a longitudinal study of schools in the 1930s. As Whiteley [21] states, Tyler provided an exemplary effort of evaluation practice by developing the so-called “traditional” or “orthodox” approach. Tyler’s point of view has come to be known as objectives-oriented (or objectives-referenced) evaluation. His evaluation focused upon curriculum development, the development of objectives and their concomitant evaluation. The behavioural approach that he used was characterised as mechanistic, despite the benefit of its inherent measurability. [22] The strong behaviourist emphasis in use at that time, and the utilisation of psychometric tests, have been viewed with some reserve by later authors. [10,12,23]

Tyler’s work was far-reaching, and affected the work of many future evaluation theorists. A number of later theoretical works rest heavily on Tyler’s views of evaluation, emphasising particularly the methodology as objectives-based measurement. During the 1960s and 1970s, however, researchers began developing new evaluation models that went far beyond Tyler’s original concepts of evaluation.

The first full-scale description of the application of research methods in evaluation was a work by the sociologist Edward Suchman, [24] who wrote a book entitled “Evaluative Research”. Suchman [24] distinguishes between evaluation as a commonsense usage, referring to the “social process of making judgments of worth” and evaluative research that uses scientific research methods and techniques.

In 1967, Scriven [25] brought in the concept of “formative” and “summative” evaluation as a way of distinguishing the two kinds of roles evaluators play: they can assess the merits of a programme while it is still under development, or they can assess the outcomes of an already completed programme. [26] Formative evaluation judges students’ progress in meeting the objectives and developing competencies for practice, while summative evaluation judges the quality of students’ achievement during the course.

At the same time, Stake [27] developed a model that embraces three facets: antecedents, transactions, and outcomes. This is called the “Countenance Model”. Antecedents refer to conditions related to individuals’ ability and willingness to learn. These conditions exist in the individuals before the training occurs. Transactions are related to teaching methods, examinations or tests, and represent all the processes involved in the training. Outcomes refer to factors such as ability and achievements, which are the product of antecedents and transactions. Stake [27] relates the information obtained to judgement and description, and links these together with contingency and congruence, since finding the contingencies among antecedents, transactions and outcomes, and revealing the congruence between intents and observations, are the two principal ways of processing the data. Stake [28] also developed the “responsive evaluation” model and emphasised that evaluators should attend actual programme activities, use whatever data-gathering schemes seem appropriate, and respond to the audience’s needs for information.

Stufflebeam [29] recognised the need for evaluation to be more holistic in its approach and developed a model that focused on the decision-making process used for the development and implementation of the curriculum. The model’s first installment was published more than 35 years ago and the evaluation process focused on one of four categories: Context, Input, Process and Product. The model was therefore labelled the CIPP model. The CIPP model has also been used for accountability purposes, as it represents a rationale for assisting educators to be accountable for the decisions that they have made in the course of implementing a programme. Context evaluation refers to the nature of the curriculum, input evaluation focuses on the resources required to accomplish the objectives of the curriculum, and process evaluation concerns the link between theory and practice and the implementation of the curriculum. Finally, product evaluation refers to the end-result, the extent to which the curriculum objectives have been met. [22] In general, these four parts of an evaluation respectively ask: What needs to be done? How should it be done? Is it being done? Did it succeed? Stufflebeam [30] also reconciled his model with Scriven’s formative and summative evaluation by stating that formative evaluation focuses on decision making and summative evaluation on accountability. [26] Stufflebeam [31] has been involved in both formative metaevaluation and summative metaevaluation. Metaevaluation (evaluation of an evaluation) is to be done throughout the evaluation process; evaluators also should encourage and cooperate with independent assessments of their work. Stufflebeam believed that metaevaluation should be a form of communication and a technical, data-gathering process.

The CIPP model has been useful in guiding educators in programme planning, operation and review, as well as programme improvement. [32] On the other hand, it was criticised for the difficulty of measuring and recording context and input. [21] In addition, the model became difficult to work due to the decision-making process required to put the model into practice, and the inability of participants to evaluate their own actions. [21]

The work of Weiss [33] led to the development of an approach known today as theory-based evaluation, theory-driven evaluation, or programme theory evaluation (PTE). This approach to evaluation focuses on theoretical rather than methodological issues. The major focusing questions here are: “How is the programme supposed to work? What are the assumptions underlying the programme’s development and implementation?” The model, often called a logic model, is typically developed by the evaluator in collaboration with the programme developers, either before the evaluation takes place or afterwards. Evaluators then collect evidence to test the validity of the model. [26] The contribution of PTE is that it forces evaluators to move beyond treating the programme as a black box and leads them to examining why observed changes arising from a programme occurred.

In line with the new philosophy of person-centred evaluation, influenced by the theories of Rogers, [34] Freire, [35] Gagné [36] and Knowles, [37] Parlett and Hamilton [23] developed the “Illuminative Evaluation” model. In this model, evaluation takes place by using observations and interviews with those involved in the curriculum. The problems and the specific features of the programme can be illuminated by using observations, interviews, questionnaires, analysis of documents and background information. [23] Most of the data produced by using this method are of a qualitative nature. This model emerged from a series of innovations that were initiated by the “new-wave” evaluation researchers. According to them, evaluators should aim to produce responsive and flexible work that uses qualitative data, understandable to those for whom it is meant. It was also emphasised that value positions of the evaluator should be clarified so that any bias in the interpretation is apparent. [21]

An alternative title for the “Illuminative Evaluation” model is “the anthropological model”. This model provided a qualitative perspective on the evaluation of educational programmes and a much wider view of the whole programme, as opposed to the measurement of behaviour. This evaluation method was criticised in terms of validity of results, including researcher bias and self-judgement. [21] In particular, most criticism was related to the model’s potential for subjectivity, since evaluation appears too dependent upon the interests and values of the observer. This may be a particular problem where the evaluator is also the course tutor, since role conflict is likely to occur. Despite the criticism to which this model has been subjected, the use of qualitative methods for gaining insight into the educational process and the involvement of the participants are concepts that are highly valued by contemporary evaluators such as Patton [38] for conducting meaningful evaluations in real-life contexts. Patton [39] presented the utilisation-focused evaluation model and addressed the concern that evaluation findings are often ignored by decision makers. He probed evaluation programme sponsors to attempt to understand why this is so and how the situation could be improved. Patton maintains that the evaluator must seek out individuals who are likely to be real users of the evaluation.

In the same context, Stenhouse [40] proposed a model that involves the teacher as both curriculum developer and evaluator. This model is called “the teacher as researcher” model and it is based on the notion that within education it is common practice for the teacher of a programme to also carry out the evaluation, or part of it. Stenhouse [40] saw a curriculum as both what a school (or teacher) intends to do, and what it actually does, composed of three broad domains: content, skills, knowledge. He argued that there was a frustrating gap between intent and delivery. To bridge this gap, Stenhouse suggested “the teacher as researcher” model. He called for teachers to become researchers and research their own teaching either alone or in a group of co-operating colleagues. He argued for an evolving style of co-operative research by teachers and full-time researchers to support the teachers in testing out theories and ideas in their classroom. A criticism of this model relates to its subjectivity and potential for role conflict, since the teacher is also the evaluator. This attribute involves also the concept of self-evaluation as a part of the evaluation process.

Another approach is Eisner’s [41] “connoisseurship” model, which is rooted in the field of art criticism. Eisner [41] first presented his views on what he called “educational connoisseurship” and has subsequently expanded on those views. [42,43] From his prior experience as curriculum expert and as an artist, Eisner views education work as an expression of artistry that allows us to look beyond the technical and develop more creative and appropriate responses to the situations that educators and learners encounter. Two concepts are key to Eisner’s model: educational connoisseurship and educational criticism. Eisner [42] describes connoisseurship and criticism as follows:

“If connoisseurship is the art of appreciation, criticism is the art of disclosure. Criticism, as Dewey pointed out in Art as Experience, has at its end the re-education of perception... The task of the critic is to help us to see. Thus… connoisseurship provides criticism with its subject matter. Connoisseurship is private, but criticism is public. Connoisseurs simply need to appreciate what they encounter. Critics, however, must render these qualities vivid by the artful use of critical disclosure” (pages 92-93).

Additional evaluation approaches focused on “Goal-Free” evaluation and “Goal-Based” evaluation. These definitions were generated by Scriven, [44] who stated that pre-determined objectives might impede full access to information about the educational programme. Such efforts value the use of qualitative approaches to evaluation, free conceptualisation and measurement of needs data of the curriculum users. The definition of Goal-Free evaluation makes clear that creativity, freedom in evaluation planning and attention to hidden aspects of the curriculum are highly praised, maybe for the first time in evaluation activity:

“in this type of evaluation the evaluator is not told the purpose of the programme but enters into the evaluation with the purpose of finding out what the programme actually is doing without being cued as to what it is trying to do. If the programme is achieving its stated goals and objectives, then these achievements should show up [in observation of process and interviews with consumers (no staff)]; if not, it is argued, they are irrelevant” (page 68). [44]

In this type of evaluation, the needs of the people to whom the programme is addressed are collected and analysed. Then the evaluation programme information that is collected by the evaluator is compared with these needs. However, the model has been criticised as not really addressing the issue of needs assessment in concrete and practical terms, and thus it is better to be used as a parallel activity to goal-based evaluation. [45]

Another approach to evaluation is the “case study” model by Kenworthy and Nicklin, [12] in which “a wide range of evaluation techniques are used in order to obtain as complete an account as possible of the whole programme or programme unit” (page 127). In this model both qualitative and quantitative methods are used, and methods of data collection involve interviews, observations and questionnaires. The disadvantage of this model is its use of external evaluators, which results in significant monetary cost.

The notion of process evaluation has also been discussed in the context of educational evaluation. Patton [38] stressed that process evaluation is particularly appropriate for disseminating a model intervention where a programme has served as a demonstration project or is considered to be a model worthy of replication. Patton [38] also suggested that process evaluation requires a detailed description of programme operation and an analysis of the introduction of the educational programme. The work of Patton [38] favours naturalistic approaches in evaluation and strives for the pragmatic orientation of qualitative inquiry and for flexibility in evaluation, rather than the imposition of predetermined models.

In 1997, the “Emergent Realists”, Pawson and Tilley, [46] introduced the notion of realism in evaluation and declared that realism can serve evaluation by offering an alternative to the two contradicting paradigms (positivism and naturalism); a new paradigm compatible with the pragmatic realism, which is implicit in the work of most evaluators.

The realist approach incorporates the realist notion of a stratified reality with real underlying generative mechanisms. Using these core realist ideas and others, Pawson and Tilley [46] build their realistic evaluation around the notion of context-mechanism-outcome (CMO) pattern configurations. In their view, the central task in realistic evaluation is the identification and testing of CMO configurations. This involves deciding what mechanisms work for whom and under what circumstances. Further to this, Julnes et al. [47] stress the necessity to further develop and promote a realism-based view of evaluation. They refer to this as “elaboration” of realistic evaluation, which requires moving on from a general model of explanation to a more general model of knowledge construction that includes explanation. The authors develop their argument by challenging the two types of knowledge - one about structure and one about mechanisms - which derive from the realists’ notion of the knowledge of phenomena. They say that the identification of underlying mechanisms is only one part of the story and suggest three additional aspects of knowledge development: classification, programme monitoring and values inquiry. In this discussion, the inability of evaluators to appreciate classification, systematic monitoring and stakeholders’ values in educational evaluation is highlighted. Specific attention was given to people’s values and values inquiry as a major facet of programme evaluation. [47] The importance of stakeholders’ involvement in evaluation was also stressed, and House [48] stated that there is general agreement that the values and interests of important stakeholders can and should be included in evaluations to enable the evaluator to make syntheses and move beyond formal theories.

One of the best-known evaluation methodologies for judging learning processes is Kirkpatrick’s Four Level Evaluation Model, which was first published in a series of articles in 1959. [49] However, it was not until more recently that he provided a detailed elaboration of its features and the four levels became popular. Kirkpatrick’s evaluation model is less known in educational evaluation circles because it focuses on training events. The four steps of evaluation consist of: a) Reaction and personal reflection from participants, i.e. on satisfaction, effect and utility of the training programme, b) Learning - growth of knowledge, learning achievements, c) Behaviour - changes in behaviour, transfer of competencies into concrete actions/situations, and d) Results - long-term lasting transfer, also in organisational and institutional terms. [49]

In addition to using conceptual models for programme and curriculum evaluation, some institutions choose to use process models in order to “conduct” the educational process, starting from the needs and moving towards the expected achievements.

Building on the first three steps of the Centers for Disease Control and Prevention evaluation framework, Zimmerman and Holden [50] developed a five-step model called Evaluation Planning Incorporating Context (EPIC), which provides a plan for addressing issues in the preimplementation phase of programme evaluation. The first step, assessing context, requires the evaluator to gain a thorough understanding of the unique environment and people involved in the programme and how they may influence critical information about the programme. The second step, gathering reconnaissance, involves understanding and getting to know all of the people engaged in the evaluation plan. In the third step of the EPIC model, the evaluator identifies potential stakeholders to engage in the evaluation. The fourth step of the EPIC model, describing the programme, involves learning about all facets of the programme, and identifying the underlying concepts behind the programme’s goals and objectives. In the final step of the model, focusing the evaluation, the evaluator leads a process to finalise the evaluation plan. The model is especially helpful in providing guidelines for conducting an evaluation. [2]

This dynamic evaluative activity resulted in a changing era in the field of evaluation. The ongoing debate in the field of evaluation in education generated different concepts in evaluation science and wider perspectives. Scientists recognised the problems associated with behaviouristic and mechanistic approaches to evaluation and considered educational evaluation from a multidisciplinary point of view, taking into account experience and expertise from various disciplines. A shift from the behaviouristic approach was initiated by developing numerous models of evaluation that recognised that there was more to a programme than the resultant effects that it had on participants. The issues of context and content were praised for their value in judging a programme’s effectiveness. The use of qualitative modes of inquiry was introduced, shifting the emphasis from outcome measurement to process, structure and context. A significant lesson of the historical developments in the field of evaluation was that evaluation models attracted praise as well as criticism from educators and evaluators. The benefits and shortcomings that arose from applying evaluation in practice helped modern scientists to experiment with new, flexible evaluation approaches.

Discussion

The above brief review of evaluation models has further highlighted the two contradictory attributes of educational evaluation that were described earlier: its usefulness in education and its complexity in implementation.

Many of the emergent models have been complex and difficult to apply in a real life setting. Stake’s model proved too complex to put into practice. Stufflebeam’s model appeared unpopular and difficult in measuring and recording the context and the input. Scriven’s goal-free evaluation seemed not to address the issue of needs assessment of the target population, and the “Illuminative Evaluation” model of Parlett and Hamilton was criticised for the validity of its results, subjectivity and researcher bias. In the same way, the “teacher as researcher” model gave rise to concern about the role of teacher-evaluator and appeared difficult to apply in real settings. [21,22,45]

Evaluation scientists were sceptical about the evaluator’s role in certain models and discussed the issues of researcher bias, self-judgement, subjectivity and role-conflict. Specific qualities and preparation were required for the educators, especially when they were engaged in the role of evaluator as some of the evaluation models proposed. On the other hand, cost was an inhibiting factor for approaches based on external evaluation. Finally, some of the models appeared to work more effectively when used in parallel or in a complementary way with others. This led evaluation researchers to focus on new concepts and approaches, which would facilitate the application of evaluation in practice. Kenworthy and Nicklin, [12] Patton, [38] Sechrest and Sidani, [51] extensively discussed issues of flexibility in evaluation research, a combination of models and triangulation of approaches. A number of questions were posed: Why would an evaluator have to follow a specific model in the evaluation practice? What is the benefit of moving along with certain lines and working within a tight predetermined schedule?

Certainly there is a benefit in keeping evaluation activities organised in a systematic manner, which provides definite purposes, objectives, goals and methods and strives for certain outcomes. However, the free conceptualisation and the potential to uncover areas of strengths and weaknesses in a programme that are outside of a certain evaluation framework are obstructed. As Shadish [52] says, “following a certain model is like losing something of the beauty of experimenting, which lies on the researchers’ inability to fully control what happens when a new intervention is applied”. It may also impede the ability to discover new things, which the researcher could not contemplate or foresee. Models and specific approaches in evaluation can have an assisting role. They can provide alternatives, ideas and concepts that can help evaluators to identify and distinguish among different approaches for formulating the appropriate evaluation strategy for their own investigation. If we compare evaluation with art, it may be argued that, just as a painter uses a model without suppressing the artist’s free conceptualisation and inspiration, an evaluator can use a model to synthesise a tailor-made evaluation approach. Patton [38] suggests that models are not recipes but frameworks. He further considers evaluation as diplomacy, the art of the possible, by stating that:

“The art of evaluation includes creating a design and gathering information that is appropriate for a specific situation and particular decision making context… Beauty is in the eye of the beholder and the evaluation beholders include a variety of stakeholders: decision makers, policy makers, funders, programme managers, staff, program participants, and the general public” (page 13). [38]

Scepticism regarding the utilisation of specific models in the evaluation practice and working within a tight predetermined schedule motivated educators and evaluators to view educational practice within its realistic framework, and to identify its unique context and mechanisms. Historical perspectives in educational evaluation led the modern evaluation scientists to rethink issues that Papanoutsos, [53] the pioneer of the educational reformation in Greece, underscored when speaking about education. He declared that the field of education differs from other fields since it works towards the spirit, the intellectuality, the ethos, and the persona of people. These characteristics are equally held by teachers and students, and there is a continuous conscious or unconscious exchange of messages from both sides. Education depends on historical backgrounds, cultures, socioeconomic developments of the existing social setting and political interactions and decisions. It involves social needs, personal preferences and ambitions, learning processes and developments. [53] It is, therefore, not certain that in the multidimensional and interactive context of education, an inflexible ranking objective indicator system would reveal all aspects of quality in an educational programme.

In the modern era of evaluation, following a certain model restricts experimentation and discovery. Synthesis of methods, creativity and naturalistic approaches were valued in the transforming era of evaluation. Educators, researchers and evaluation scientists valued issues related to the rapidly changing health care sector, modern health care environment and educational programmes, and discussed educational reform, quality and evaluation through an open dialogue with related stakeholders. [18,54] The demonstration of programme quality appears to be a major concern for nurse educators. Quality improvement, monitoring and assessment are important steps in accreditation processes for nursing education. [19]

Aspects of rigorous and yet realistic evaluation processes in nurse education are still in focus. This rigorous evaluation involves all the facets of the educational programmes, such as content, process and outcomes. [3,16] Within this framework, a great many evaluation activities and instruments were developed in an effort to explore subjective phenomena in education, and to measure a variety of learning experiences. [4] It was suggested that control group experimental designs were not adequate for the new demanding era of educational evaluation. In contrast, mixed balanced methods with emphasis on qualitative research approaches were considered more useful in the evaluation of educational programmes. [16,17,55] Lastly, as Ogrinc and Batalden [5] put it, speaking about realist evaluation, the evaluation of educational programmes is a challenging issue that requires more than a yes or no answer. The complex context of education requires explanations of why a programme is successful or not, and answers to “what works, for whom, and in what circumstances”. [46]

Although there are sufficient models that support the measurement of knowledge and skills, the unique nature of the health care educational context necessitates the use of evaluation activities with unique qualities, such as realist evaluation, that links the context, mechanisms and outcome patterns. Models and specific approaches in evaluation can have an assisting role by providing alternatives, ideas and concepts that can help to identify and distinguish among different approaches and principles for formulating the appropriate evaluation strategy for our own unique investigation. Existing models can be used in a complementary manner or as a foundation for developing new evaluation strategies. From the historical review of the evaluation models it was also clearly understood that the idiosyncrasies of each situation or context must be appraised by the evaluation researchers as requiring tailor-made evaluation approaches and not necessarily amenable to pre-existing models. This is also supported by the uniqueness of the learning context, which requires active participation in evaluation process by teacher and student and the need for mutual cooperation in evaluation activities.

Conclusion

Synthesis of evaluation models is not only possible but also evident most of the time, since the evaluator’s work is seldom guided by, or built directly on, specific evaluation models. The efforts of some evaluation researchers to facilitate the application of evaluation in practice led to a merger of philosophies from different fields and, ultimately, to the emergence of innovative thinking in the area of evaluation. The models and methods of evaluation represent the imperfect real world of evaluation and, as such, should be viewed with caution. Because education has a unique nature based on people’s values and characteristics, these models and methods can serve as a stimulus to expand work in evaluation by constructing exceptional evaluation frameworks rooted in concepts of realism, the significance of values and the unique attributes of individuals.

Evaluation in modern educational contexts additionally requires a number of qualities on the part of the evaluators, such as openness, commitment, expertise, willingness to change, self-confidence, team-work, administrative support, infrastructure, resources, experimentation, willingness to fail, vision and optimism. The experience and knowledge offered by the history of evaluation have helped contemporary evaluators to pave the way for innovative methods and models that support flexibility and eliminate stereotypes.

References

- Oermann M.H., and Gaberson K.B. Evaluation and Testing in Nursing Education. 3rd edition. Eds., Springer Publishing Company, New York, 2009.

- Keating S.B. Curriculum development and evaluation in Nursing. 2nd edition. Eds., Springer Publishing Company, New York, 2011.

- Roxburgh M, Watson R, Holland K, Johnson M, Lauder W, Topping K. A review of curriculum evaluation in United Kingdom nursing education. Nurse Education Today 2008; 28: 881-889.

- Foley D. Development of a Visual Analogue Scale to Measure Curriculum Outcomes. Journal of Nursing Education 2008; 47(5): 209-213.

- Ogrinc G, Batalden P. Realist Evaluation as a framework for the assessment of teaching about the improvement of care. The Journal of Nursing Education 2009; 48 (12): 661-668.

- Coryn C, Noakes L, Westine C, Schroter D. A Systematic Review of Theory-Driven Evaluation Practice From 1990 to 2009. American Journal of Evaluation 2011; 32(2): 199-226.

- Lateef F. Healthcare Professional Education: 10 important things for the next decade. South-East Asian Journal of Medical Education 2011; 5(1): 10-17.

- O'Neill E.L. Comprehensive curriculum evaluation. Journal of Nursing Education 1986; 25(1): 37-39.

- Hogg S.A. The problem-solving curriculum evaluation and development model. Nurse Education Today 1990; 10(2): 104-110.

- Rolfe G. Listening to students: course evaluation as action research. Nurse Education Today 1994; 14(3): 223-227.

- Watson J.E., Herbener D. Programme evaluation in nursing education: the state of the art. Journal of Advanced Nursing 1990; 15(3): 316-323.

- Kenworthy N., Nicklin P. Teaching and Assessing in Nursing Practice: An experiential approach. Eds., Scutari Press, London, 1989.

- Lawton D. In Gordon P (ed) The study of the curriculum. Batsford Academic, London, 1981.

- Shapiro LT. Training effectiveness Handbook. A high-result system for design, delivery, and evaluation. Eds., McGraw-Hill, New York, 1995.

- Grant-Haworth J., Conrad C.F. “Emblems of Quality in Higher Education. Developing and Sustaining High Quality Programs”. Allyn and Bacon, Boston, 1997.

- Attree M. Evaluating health care education: Issues and methods. Nurse Education Today 2006; 26: 640-646.

- Stone N. Evaluating interprofessional education: The tautological need for interdisciplinary approaches. Journal of Interprofessional Care 2006; 20(3): 260-275.

- Giddens JF, Morton N. Curriculum Evaluation. Report Card: An evaluation of a concept-based curriculum. Nursing Education Perspectives 2010; 31(6): 372-377.

- Ellis P, and Halstead J. Understanding the Commission on Collegiate Nursing Education Accreditation Process and the Role of the Continuous Improvement Progress Report. Journal of Professional Nursing 2012; 28(1): 18-26.

- Tyler, R. W. General statement on evaluation. Journal of Educational Research 1942; 35: 492–501.

- Whiteley S.J. Evaluation of nursing education programmes-theory and practice. International Journal of Nursing Studies 1992; 29(3): 315-323.

- Sconce C., Howard J. Curriculum evaluation a new approach. Nurse Education Today 1994; 14: 280-286.

- Parlett M.R., Hamilton D.F. Evaluation as illumination: a new approach to the study of innovatory programmes. In: Parlett, Dearden (eds.) Introduction to illuminative evaluation. Studies in Higher Education. Pacific Soundings Press: 1972: 9-29.

- Suchman E. Evaluative research: Principles and practice in public service and social action programs. Eds., Russell Sage, New York, 1967.

- Scriven M. The methodology of evaluation. In R. E. Stake (Ed.), Curriculum evaluation. American Educational Research Association Monograph Series on Evaluation No. 1. Rand McNally, Boston, 1967.

- Owston R. Models and Methods for Evaluation. York University, Toronto, Canada 2010. Website : https://faculty.ksu.edu.sa/Alhassan/Hand book on research in educational communication/ER5849x_C045.fm.pdf. Accessed on 1/10/2012.

- Stake R. The countenance of educational evaluation. In: Hamilton D et al. 1977 Beyond the numbers game. Macmillan Education, 1967: 146-155.

- Stake, R. E. Evaluating the Arts in Education: A Responsive Approach. Columbus, OH: Merrill 1975.

- Stufflebeam D.L. Educational Evaluation and Decision Making. Peacock Publishing, Itasca, Illinois, 1971.

- Stufflebeam D.L. CIPP Evaluation Model Checklist. (2nd Edition) A tool for applying the CIPP Model to assess long-term enterprises. Intended for use by evaluators and evaluation clients/stakeholders. Evaluation Checklists Project 2007. Available from: www.wmich.edu/evalctr/checklists.

- Stufflebeam, D.L. Meta-evaluation. Western Michigan University 1974. Available from: https://www.wmich.edu/evalctr/pubs/ops/ops03.pdf. Accessed on: 2/2/2008.

- Worthen B., & Sanders J. Educational evaluation: Alternative approaches and practical guidelines. White Plains, NY, Longman 1987.

- Weiss C. H. Evaluation Research: Methods for Assessing Program Effectiveness. Prentice Hall, Englewood Cliffs, NJ, 1972.

- Freire P. Pedagogy of the oppressed. Translated by M B Ramos. Penguin, Harmondsworth, UK, 1973.

- Gagné R. The conditions of learning. Eds., Holt, Rinehart and Winston, New York, 1977.

- Knowles M.S. The adult learner-A neglected species, Eds., Gulf Publishing, USA, 1978.

- Patton M.Q. “Qualitative Evaluation and Research Methods”. 2nd ed. Sage Publications, Newbury Park, 1990.

- Patton, M. Q. Utilization-focused evaluation. CA: Sage Publications, Beverly Hills, 1978.

- Stenhouse L. An introduction to curriculum research and development. Heinemann Educational Books 1975. In: Whiteley S. Evaluation of nursing education programmes-theory and practice. International Journal of Nursing Studies 1992; 29(3): 315-323.

- Eisner E. Educational connoisseurship and criticism: Their form and function in educational evaluation. Journal of Aesthetic Education 1976; 10: 135-150.

- Eisner E. The art of educational evaluation: A personal view. The Falmer Press, Philadelphia, PA, 1985.

- Eisner E. The enlightened eye: Qualitative inquiry and the enhancement of educational practice. Merrill, Upper Saddle River, NJ, 1998.

- Scriven M. Evaluation Thesaurus (3rd Edition) Edgepress, California. 1981.

- Guba EG, Lincoln YS. Effective Evaluation: Improving the Usefulness of Evaluation Results through Responsive and Naturalistic Approaches. San Francisco: Jossey-Bass. In: Patton M.Q. (1990) Qualitative Evaluation and Research Methods. 2nd edition. Sage Publications, London, 1981.

- Pawson R and Tilley N. Realistic Evaluation. Sage Publications, Thousand Oaks, CA, 1997.

- Julnes G, Mark MM, Henry GT. Promoting Realism in Evaluation. Realistic Evaluation and the Broader Context. Evaluation 1998; 4(4): 483-504.

- House ER. Putting Things Together Coherently: Logic and Justice. In D. Fournier (ed) Reasoning in Evaluation: Inferential Links and Leaps. New Directions for Evaluation, no 68. Jossey Bass, San Francisco, 1995.

- Kirkpatrick, D. L. Evaluating Training Programs: The Four Levels, 2nd ed. Berrett-Koehler, San Francisco, CA, 2001.

- Zimmerman MA., and Holden DJ. A Practical Guide to Program Evaluation Planning: Theory and Case Examples. SAGE Publications 2009.

- Sechrest L, Sidani S. Quantitative and Qualitative methods: Is there an alternative? Evaluation and Program Planning 1995; 18(1): 77-87.

- Shadish W.R. Philosophy of science and the quantitative-qualitative debates: thirteen common errors. Evaluation and Program Planning 1995; 18 (1): 63-75.

- Papanoutsos E.P. «Meters of our era. The crisis-the philosophy - the art - the education - the people - the man». Philippotis Publications, Athens, 1981 (In Greek).

- Forbes MO, Hickey MT. Curriculum Reform in Baccalaureate Nursing Education: Review of the Literature. International Journal of Nursing Education Scholarship 2009; 6(1): 1-16.

- Stavropoulou A., Kelesi M. Concepts and Methods of Evaluation in Nursing Education – A Methodological Challenge. Health Science Journal 2011; 6 (1): 11–23.