Keywords

Data envelopment analysis; Efficiency change; Hospital efficiency; Malmquist productivity index; Productivity; Technological change; Uganda

Abbreviations

AHSPR: Annual Health Sector Performance Reports; CRS: Constant Returns to Scale; DEA: Data Envelopment Analysis; DMU: Decision Making Unit; DRS: Decreasing Returns to Scale; EFFCH: Efficiency Change; FY: Financial Year; HSSIP: Health Sector Strategy and Investment Plan; IRS: Increasing Returns to Scale; MOH: Ministry of Health; MPI: Malmquist Total Factor Productivity Index; NGOs: Non-Governmental Organizations; OPD: Out Patient Department; PNFP: Private Not For Profit; SECH: Scale Efficiency Change; TECH: Technical Change; VRS: Variable Returns to Scale

Introduction

Background

The World Health Organization describes a health system as consisting of all the organizations, institutions, resources and people whose primary purpose is to improve health [1]. As key institutions in a health system, hospitals offer a range of preventive, promotive, rehabilitative and curative health services.

Uganda is served by a healthcare delivery system that comprises the public sector, the private sector and the non-governmental organization (NGO)/private not-for-profit sector. At the apex of the healthcare delivery system are national referral hospitals, below which are regional referral hospitals. There is a district health care delivery system comprising district hospitals, health center IVs, IIIs and IIs, and village health teams. The district hospitals act as referral centers for the district health care delivery system. The regional referral hospitals, which are the focus of our study, act as referral centers for several district hospitals within their catchment area [2].

Essential clinical care is one of the key elements of the Uganda Minimum Health Care Package and indeed a major purpose of any health system. Hospitals are the main providers of essential clinical care. In the public eye, hospitals are the “face” of the health system, on which the public bases its perception of the quality of services provided. Hospitals continue to be major users of health care resources and contributors to the outputs of inpatient, outpatient and preventive care.

Hospitals represent a significant proportion of health expenditures. In Uganda, for example, about 26% of total health expenditure is incurred in hospitals [3]. Thus improvement in the efficiency and productivity of hospitals may result in large savings in healthcare expenditures, which could be devoted to other services such as prevention. Additionally, formulating good policies and strengthening institutions like hospitals increases the productivity not just of additional spending but also of existing spending commitments. Institutions like hospitals, which use a large proportion of the health budget, would be natural targets for productivity improvements. There is thus a need to track their efficiency and productivity over time and to investigate the drivers of any changes in order to take the required corrective action.

According to Hensher, there are extensive inefficiencies in low- and middle-income countries [4]. Causes of these inefficiencies include failure to minimize inputs used in health service delivery, use of more costly inputs, operating service delivery at an inappropriate scale and poor remuneration of health workers that does not encourage good performance [4]. Such inefficiencies in the hospital sector could account for up to 10% of total health spending [4].

The Uganda National Health Policy indicates that efficiency is currently not well addressed in the way resources are mobilized, allocated and used [5]. Thus, one of the Uganda Ministry of Health objectives in the Health sector strategy and investment plan is to improve the efficiency and effectiveness of health services [6]. For this to be achieved, information on the current level and trends in efficiency and productivity of the various health delivery institutions will be required.

In the African region, DEA has been applied to analyze the efficiency of hospitals in several countries, for example Namibia [7], Burkina Faso [8], Ethiopia [9], Nigeria [10], Eritrea [11] and Uganda [12]. However, only a few of the studies have addressed productivity growth. This study is intended to add to the growing body of literature on hospital productivity in various countries, for example Angola [13], Botswana [14], South Africa [15], Canada [16], Portugal [17], Ireland [18], India [19], China [20], Greece [21], Brazil [22] and Taiwan [23]; and to provide more recent evidence from Uganda, since the last published study used 1998-2003 data [12].

The specific objectives of this study are to: (i) measure changes in the technical and scale efficiency of regional referral hospitals in Uganda over a five year period; (ii) measure changes in productivity of the regional referral hospitals over the same time period; and (iii) identify the drivers of any observed productivity changes during the study period.

The study provides evidence that might guide Uganda’s health policy-makers to design policy and managerial interventions for achieving their stated objective of improving efficiency and productivity of health services with a focus on hospitals.

Methods

This is a longitudinal study using five year secondary data (FY 2009/10 to 2013/14). We use Data Envelopment Analysis (DEA) to measure technical and scale efficiency of the individual hospitals during the study period. Additionally, we use the Malmquist Productivity Index (MPI) to measure hospital productivity and its changes over the study period.

Conceptual framework

Hospitals as production units combine multiple health system inputs (e.g., health workforce, medical products, non-medical supplies, clinical technologies, beds, building space, ambulances) to produce multiple health service outputs through a production process. While the ultimate output of healthcare is the marginal change in health status, this is difficult to measure in most data sets, and so intermediate outputs – volume of care (e.g., number of operations and outpatient visits) – usually become the proxy study outputs.

Two types of efficiency can be defined: technical and allocative efficiency. Technical efficiency refers to organizing available resources in such a way that the maximum feasible output is produced. In such a situation, no organization can yield a higher output with the available resources. Allocative efficiency, or price efficiency, on the other hand refers to use of the available budget in such a way that the most productive combination of resources is utilized, taking into consideration the relative prices of resources. In such a situation, taking into consideration the available budget, no alternative combination of resources would provide a higher output [24]. This study focuses on technical efficiency. In the hospital context, technical efficiency means making the best use of available health system inputs and the existing technology.

Productivity refers to how much output is obtained from the available resources; it is the ratio of output y (what is produced) over input x (the resources used). An example is labor productivity, where output might be approximated by gross domestic product (GDP) of a country with input measured in terms of total labor hours employed in a given time period. Labor productivity is an example of a measure of single-factor productivity. This study however focuses on a broader measure of productivity that includes all of the services produced and accounts for all of the resources (not just labor) used to produce these services in a hospital context. This is referred to as multifactor productivity or total factor productivity (TFP). TFP is thus the ratio of all outputs produced over all inputs employed to produce them.

Whereas productivity simply refers to the ratio of output to input, efficiency involves a comparison of observed output and the maximum potential output obtainable from a given input; or a comparison of observed input to the minimum potential input required to produce the output. Equally efficient units may well have different productivity depending on their scale of operation, as well as other differences in their production possibility sets.
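The distinction can be illustrated with a small numeric sketch. The two hospitals and all figures below are hypothetical and are not drawn from the study data; the function names are illustrative only.

```python
def productivity(output: float, input_: float) -> float:
    """Single-factor productivity: output per unit of input."""
    return output / input_


def output_efficiency(observed_output: float, max_feasible_output: float) -> float:
    """Output-oriented efficiency: observed output relative to the maximum
    output obtainable from the same input."""
    return observed_output / max_feasible_output


# Hypothetical hospitals: staff, observed OPD visits, and the maximum number
# of visits assumed feasible at that staffing level.
small = {"staff": 100, "visits": 40_000, "max_visits": 40_000}
large = {"staff": 300, "visits": 90_000, "max_visits": 90_000}

for name, h in (("small", small), ("large", large)):
    print(
        name,
        productivity(h["visits"], h["staff"]),
        output_efficiency(h["visits"], h["max_visits"]),
    )
```

Both hypothetical hospitals are fully efficient (each produces its own maximum feasible output), yet the smaller one has higher productivity per staff member, which is the situation the paragraph describes.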

Data envelopment analysis (DEA)

DEA is a non-parametric, data driven approach that uses linear programming techniques to compute the efficiency scores for each decision making unit (DMU) in a data set [25]. Examples of such DMUs may include hospitals [26]. In a hypothetical scenario of a hospital using one health system input to produce one health service output, the efficiency of such a hospital would be obtained by dividing the quantity of that output by the quantity of input. In reality, however, hospitals use multiple inputs (e.g., staff, medicines, medical equipment) to produce multiple outputs (e.g., curative services, preventive services). In this scenario, the efficiency of the hospitals is expressed as the weighted sum of health service outputs divided by the weighted sum of the health system inputs [26]. DEA can be used for such a calculation.
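A minimal sketch of the weighted ratio is shown below. In DEA the weights are chosen by the linear program separately for each hospital; here they are fixed by hand purely for illustration, and the hospital figures and weights are hypothetical.

```python
def weighted_efficiency(outputs, inputs, output_weights, input_weights):
    """Ratio of the weighted sum of outputs to the weighted sum of inputs."""
    weighted_out = sum(u * y for u, y in zip(output_weights, outputs))
    weighted_in = sum(v * x for v, x in zip(input_weights, inputs))
    return weighted_out / weighted_in


# Hypothetical hospital.
# Outputs: OPD visits, inpatient days, deliveries. Inputs: staff, beds.
outputs = (100_000, 90_000, 4_000)
inputs = (200, 300)

# Illustrative weights only; DEA would solve for these.
output_weights = (4e-6, 4e-6, 5e-5)
input_weights = (2e-3, 2e-3)

score = weighted_efficiency(outputs, inputs, output_weights, input_weights)
print(round(score, 2))
```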

DEA solves as many linear programming problems as the number of the DMUs in the study sample [27]. In DEA the efficiency of a DMU (referral hospitals in this case) is measured relative to a group's observed best practice; the notional ‘production frontier’ representing optimal efficiency. Thus, the benchmark against which to compare the efficiency of a particular referral hospital is determined by the group of referral hospitals in the study. All DMUs lie on or below the ‘production frontier’. DMUs that are technically efficient lie on the production frontier and have a score of 1 or 100%, whereas inefficient ones lie below the production frontier and have efficiency scores of less than 1 (i.e., less than 100%).
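The idea of scoring each unit against the group's best practice can be sketched for the simplest case of one input and one output under constant returns to scale, where no linear program is needed: the frontier is the highest observed output/input ratio. This is a simplified illustration, not the multi-input, multi-output model used in the study, and the hospital figures are hypothetical.

```python
def crs_efficiency_single(units):
    """CRS technical efficiency for the one-input, one-output case.

    With a single input and a single output, the CRS frontier is the ray
    through the origin with the highest observed output/input ratio, so a
    unit's score is its own ratio divided by the best ratio in the group.
    """
    ratios = {name: y / x for name, (x, y) in units.items()}
    best = max(ratios.values())
    return {name: ratio / best for name, ratio in ratios.items()}


# Hypothetical hospitals: name -> (staff, outpatient visits)
hospitals = {"A": (100, 50_000), "B": (200, 80_000), "C": (150, 75_000)}
scores = crs_efficiency_single(hospitals)
print(scores)
```

Hospitals A and C define the frontier and score 1, while B scores below 1 because its output per staff member is lower than the best observed in the group.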

Besides estimating a hospital's relative efficiency based on its location relative to the production frontier, DEA can indicate the returns to scale being experienced by a hospital. Returns to scale refers to the quantitative change in output of a firm or industry resulting from a proportionate increase in all inputs. A hospital manifests increasing returns to scale (IRS) or economies of scale when hospital output increases by a larger proportion than the increase in health system inputs, e.g., when a doubling of all inputs leads to more than a doubling of outputs. On the other hand, a hospital manifests decreasing returns to scale (DRS) or diseconomies of scale when a doubling of inputs leads to less than a doubling of outputs. Alternatively, a hospital can manifest constant returns to scale (CRS) when a proportionate change in inputs results in the same proportionate change in outputs.
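The three cases reduce to a comparison of proportional changes. The sketch below is illustrative only: the function name and scaling factors are hypothetical, and in the study itself returns to scale are inferred from the DEA models rather than from observed scaling experiments.

```python
def classify_returns_to_scale(
    input_factor: float, output_factor: float, tol: float = 1e-9
) -> str:
    """Classify returns to scale when all inputs are scaled by `input_factor`
    and outputs are observed to scale by `output_factor`."""
    if output_factor > input_factor + tol:
        return "IRS"  # outputs grow by more than inputs
    if output_factor < input_factor - tol:
        return "DRS"  # outputs grow by less than inputs
    return "CRS"  # outputs grow in the same proportion as inputs


print(classify_returns_to_scale(2.0, 2.4))  # doubling inputs, outputs up 2.4 times
print(classify_returns_to_scale(2.0, 1.6))  # doubling inputs, outputs up 1.6 times
print(classify_returns_to_scale(2.0, 2.0))  # doubling inputs doubles outputs
```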

For our study, we assumed that in reality, there is variability in returns to scale of the various hospitals in our sample. In order to allow for that variability, we estimated the linear programming problem below (1) for each hospital in our sample [27]:

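$$\max\; E_0 = \sum_{r} U_r Y_{rj_0} + U_0$$

subject to

$$\sum_{i} V_i X_{ij_0} = 1 \qquad (1)$$

$$\sum_{r} U_r Y_{rj} - \sum_{i} V_i X_{ij} + U_0 \le 0, \quad j = 1, \dots, n \qquad (2)$$

$$U_r, V_i \ge 0; \quad U_0 \text{ free in sign}$$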

The relative efficiency score (E) lies between 0 (totally inefficient) and 1 (optimal technical efficiency).

Malmquist productivity index (MPI)

There are several productivity measures which explicitly link efficiency and productivity. We opted to use the DEA-based Malmquist Productivity Index (MPI) to study efficiency and productivity changes over the considered period of time for a number of reasons: it requires information solely on quantities of inputs and outputs and not on their prices; it does not require the imposition of a functional form on the structure of production technology; it easily accommodates multiple hospital inputs and outputs; and it can be broken down into the constituent sources of productivity change, i.e., efficiency changes and technological changes [28].

Thus one of the key insights that the MPI can provide is the identification of the contributions of innovation (technical change or shifts in the frontier of technology) and of diffusion and learning (catching up or efficiency change) to productivity growth. An increase in the efficiency level can be interpreted as a move by the hospital to ‘catch up’ with the efficiency frontier. Improvement in a hospital’s health technology shifts the efficiency frontier upward [29].

We used the output-oriented MPI because hospitals in our sample have a more or less fixed quantity of inputs and managers have more managerial flexibility in controlling outputs. The output-oriented MPI is defined as the geometric mean of two periods’ productivity indices. The index attains a value greater than, equal to, or less than one if a hospital has experienced productivity growth, stagnation or productivity decline respectively, net of the contribution of scale economies, between periods t and t+1. The MPI can be broken down into the various sources of productivity change, namely Technical Change and Efficiency Change [30].

Technical change (TECH) is a measure of the change in the hospital production technology; it measures the shift in technology use between years t and t + 1. TECH is greater than, equal to or less than one when the technological best practice is improving, unchanged, or deteriorating, respectively [31].

Efficiency change (EFFCH) on the other hand is the change in the gap between observed production and maximum feasible production (production frontier) between years t and t+1. EFFCH is greater than, equal to or less than one if a hospital is moving closer to, maintaining its distance from, or diverging from the production frontier, respectively.
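For reference, the output-oriented index and its two components are commonly written as follows in the DEA literature. The notation is an assumption introduced here, not taken from the source: $D_o^{s}(x^{k}, y^{k})$ denotes the output distance function of the period-$k$ input-output bundle measured against the period-$s$ frontier.

$$M_o(x^{t+1}, y^{t+1}, x^{t}, y^{t}) = \left[ \frac{D_o^{t}(x^{t+1}, y^{t+1})}{D_o^{t}(x^{t}, y^{t})} \times \frac{D_o^{t+1}(x^{t+1}, y^{t+1})}{D_o^{t+1}(x^{t}, y^{t})} \right]^{1/2}$$

$$\mathrm{EFFCH} = \frac{D_o^{t+1}(x^{t+1}, y^{t+1})}{D_o^{t}(x^{t}, y^{t})}, \qquad \mathrm{TECH} = \left[ \frac{D_o^{t}(x^{t+1}, y^{t+1})}{D_o^{t+1}(x^{t+1}, y^{t+1})} \times \frac{D_o^{t}(x^{t}, y^{t})}{D_o^{t+1}(x^{t}, y^{t})} \right]^{1/2}$$

Multiplying the two components recovers the index, $M_o = \mathrm{EFFCH} \times \mathrm{TECH}$, so a value above one in either component contributes positively to productivity growth.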

Efficiency change when estimated under the assumption of constant returns to scale (CRS) can be further broken down into Pure Efficiency Change (PEFFCH) and Scale Efficiency Change (SEFFCH).

Scale efficiency change refers to productivity change resulting from scale change that brings the hospital closer to or further away from the optimal scale of outputs as identified by a variable returns to scale technology [30]. Scale efficiency change between years t and t+1 thus measures changes in efficiency due to the movement towards or away from the point of optimal hospital scale. It captures the deviations between the variable returns to scale (VRS) and constant returns to scale (CRS) technology for the observed inputs. Scale efficiency change is expressed as a value of less than, equal to, or greater than one if a hospital’s scale of production contributes negatively, not at all, or positively to productivity change. Pure Efficiency Change on the other hand measures change in technical efficiency under the assumption of a variable returns to scale (VRS) technology [30].

In conclusion, the computation of Malmquist indices of productivity growth over a sampled period of time addresses three issues: (i) measurement of productivity growth over the period; (ii) decomposition of changes in productivity growth into a ‘catching-up’ effect (technical efficiency change) and a ‘frontier shift’ effect (technological change); and (iii) decomposition of the ‘catching-up’ effect (technical efficiency change) to identify the main cause of improvement, which could be enhancements in pure technical efficiency or increases in scale efficiency.
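The decomposition can be sketched numerically. The code below assumes the standard output-oriented formulation of the index and the usual split of efficiency change into pure and scale components; all distance function values and efficiency scores are hypothetical, and the function and parameter names are illustrative only.

```python
from math import isclose, sqrt


def malmquist(d_t_xt, d_t_xt1, d_t1_xt, d_t1_xt1):
    """Return (MPI, EFFCH, TECH) from four output distance function values.

    d_t_xt   : period-t observation against the period-t frontier
    d_t_xt1  : period-(t+1) observation against the period-t frontier
    d_t1_xt  : period-t observation against the period-(t+1) frontier
    d_t1_xt1 : period-(t+1) observation against the period-(t+1) frontier
    """
    effch = d_t1_xt1 / d_t_xt  # catching-up effect
    tech = sqrt((d_t_xt1 / d_t1_xt1) * (d_t_xt / d_t1_xt))  # frontier shift
    return effch * tech, effch, tech


def split_efficiency_change(crs_t, vrs_t, crs_t1, vrs_t1):
    """Split efficiency change (ratio of CRS scores) into pure efficiency
    change (ratio of VRS scores) and scale efficiency change."""
    peffch = vrs_t1 / vrs_t
    seffch = (crs_t1 / vrs_t1) / (crs_t / vrs_t)
    return peffch, seffch


# Hypothetical distance function values for one hospital.
mpi, effch, tech = malmquist(d_t_xt=0.80, d_t_xt1=0.99, d_t1_xt=0.72, d_t1_xt1=0.88)

# The geometric-mean definition of the index gives the same value.
direct = sqrt((0.99 / 0.80) * (0.88 / 0.72))
assert isclose(mpi, direct)

# Hypothetical CRS and VRS scores in periods t and t+1.
peffch, seffch = split_efficiency_change(crs_t=0.80, vrs_t=0.90, crs_t1=0.88, vrs_t1=0.92)
assert isclose(peffch * seffch, 0.88 / 0.80)

print(round(mpi, 3), round(effch, 3), round(tech, 3), round(peffch, 3), round(seffch, 3))
```

In this hypothetical case all three values exceed one, so the hospital's productivity growth would be attributed both to catching up with the frontier and to an outward shift of the frontier itself.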

Sample and data

For the financial year 2009/10 (base year), there were a total of 13 public sector regional referral hospitals and 4 large private not for profit (PNFP) hospitals operational in Uganda. These 17 hospitals are considered regional referral hospitals. This number had risen to 18 regional referral hospitals in financial year 2013/14 (final year) with the addition of one public sector referral hospital. Our study sample comprised the 13 public sector regional referral hospitals which were active over the five year study period (FY 2009/10 to 2013/14).

The study used two inputs, (i) total number of health workers and (ii) total number of hospital beds, and three outputs, (i) number of outpatient department visits, (ii) number of inpatient days and (iii) total number of deliveries. The choice of inputs and outputs was based on the published hospital efficiency literature in the African Region [7-12] and availability of data for all the years studied.

Data for the 13 public sector regional referral hospitals for FY 2009/10 to 2013/14 (5 years) was collected from the MOH Annual Health Sector Performance Reports for the respective financial years (July 1-June 30).

The data was for the large part complete; missing data on inputs was filled in by using values from the previous period, whereas missing data on outputs was filled in by using the average for all hospitals during the reference period. We were not able to verify whether all the hospitals had exactly the same standards in terms of type of services provided, quality of care provided, case mix, qualification and experience of staff, working schedules, functional building capacity, hospital technology, etc.
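The two imputation rules described above can be sketched as follows. This is an illustrative reading of the text, not the authors' code: the function names are hypothetical, missing values are represented as `None`, and the output average is assumed to be taken over the hospitals that reported a value in the same period.

```python
def impute_inputs(series):
    """Fill missing input values (None) with the value from the previous period.

    A missing value in the first period has no predecessor and is left as None.
    """
    filled = []
    for value in series:
        if value is None and filled:
            value = filled[-1]
        filled.append(value)
    return filled


def impute_outputs(values_by_hospital):
    """Fill missing output values (None) with the mean across the hospitals
    that reported a value in the same period."""
    reported = [v for v in values_by_hospital.values() if v is not None]
    mean = sum(reported) / len(reported)
    return {h: (mean if v is None else v) for h, v in values_by_hospital.items()}


# Hypothetical staff numbers for one hospital over three financial years.
print(impute_inputs([200, None, 220]))

# Hypothetical deliveries for three hospitals in one financial year.
print(impute_outputs({"A": 100, "B": None, "C": 300}))
```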

We used STATA 13 to estimate the yearly hospital efficiency and the Malmquist Productivity Indexes.

Ethical Clearance

This study is entirely an analysis of data from published secondary sources. Since human subjects were not involved, it did not require ethical clearance.

Results

Hospital inputs and outputs

Table 1 presents data on the average inputs and outputs per financial year for the five financial years under study.

| INPUTS | 2009/10 Staff | 2009/10 Beds | 2010/11 Staff | 2010/11 Beds | 2011/12 Staff | 2011/12 Beds | 2012/13 Staff | 2012/13 Beds | 2013/14 Staff | 2013/14 Beds | 2009/10-2013/14 Average Staff | 2009/10-2013/14 Average Beds |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total | 2614 | 4051 | 3325 | 4025 | 3608 | 3608 | 2232 | 3773 | 3588 | 4156 | | |
| Max | 320 | 460 | 370 | 460 | 345 | 429 | 251 | 443 | 386 | 447 | | |
| Min | 109 | 120 | 157 | 120 | 240 | 120 | 97 | 100 | 143 | 160 | | |
| Mean | 201 | 312 | 256 | 310 | 278 | 278 | 172 | 290 | 276 | 320 | 236 | 302 |

| OUTPUTS | 2009/10 OPD | 2009/10 DEL | 2009/10 PATDAYS | 2010/11 OPD | 2010/11 DEL | 2010/11 PATDAYS | 2011/12 OPD | 2011/12 DEL | 2011/12 PATDAYS | 2012/13 OPD | 2012/13 DEL | 2012/13 PATDAYS | 2013/14 OPD | 2013/14 DEL | 2013/14 PATDAYS | 2009/10-2013/14 OPD | 2009/10-2013/14 DEL | 2009/10-2013/14 PATDAYS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total | 1360125 | 53794 | 1320454 | 1526571 | 61660 | 1271741 | 1580313 | 72324 | 1221371 | 1901306 | 67146 | 1221845 | 2129102 | 73018 | 1235194 | 8497417 | 327942 | 6270605 |
| Max | 248822 | 7459 | 143436 | 206090 | 7885 | 150128 | 181939 | 8998 | 282626 | 209032 | 10181 | 176671 | 301855 | 10019 | 115968 | | | |
| Min | 34258 | 423 | 49425 | 48325 | 462 | 42185 | 39306 | 491 | 8830 | 53051 | 503 | 46010 | 43323 | 708 | 70507 | | | |
| Mean | 104625 | 4138 | 101573 | 117429 | 4743 | 97826 | 121563 | 5563 | 93952 | 146254 | 5165 | 93988 | 163777 | 5617 | 95015 | 653647 | 25226 | 482354 |

OPD: outpatient department visits; DEL: deliveries; PATDAYS: inpatient days.

Table 1: Hospital inputs and outputs per financial year.

The data shows that overall during the five year period, there was growth in the number of hospital beds and total staff, implying that the hospitals in our study had experienced some capacity and scale development. However, overall percentage growth in total staff was almost twelve times the growth in hospital beds. The total number of staff grew by 37% between FY 2009/10 and FY 2013/14, whereas the overall growth in the number of hospital beds over the same period was low at 3%.

Overall, between the 2009/10 and 2013/14 FY, the total number of out-patient department visits attended to by the hospitals grew by 57%; total deliveries grew by 36% while the total number of inpatient days declined by 6%. However, year on year growth varied with declines observed in some of the intervening financial years.
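The growth figures quoted above can be reproduced from the first-year and final-year totals in Table 1. The sketch below is a simple check; the variable and function names are illustrative.

```python
def pct_growth(start: float, end: float) -> float:
    """Percentage change from `start` to `end`."""
    return (end - start) / start * 100


# (FY 2009/10 total, FY 2013/14 total), taken from Table 1
totals = {
    "staff": (2614, 3588),
    "beds": (4051, 4156),
    "opd_visits": (1_360_125, 2_129_102),
    "deliveries": (53_794, 73_018),
    "inpatient_days": (1_320_454, 1_235_194),
}

growth = {name: round(pct_growth(start, end)) for name, (start, end) in totals.items()}
print(growth)
```

Rounded to whole percentages, the results match the figures in the text: staff 37%, beds 3%, OPD visits 57%, deliveries 36% and inpatient days a decline of 6%.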

Over the five year period, the hospitals in our study attended to a total of 8,497,417 out-patient department visits and 327,942 deliveries, and provided 6,270,605 in-patient days’ worth of care. During the five year period, the average hospital had 236 staff and 302 beds, and provided a total of 653,647 OPD visits, 25,226 deliveries and 482,354 in-patient days over the whole period (the five-year totals divided by the 13 hospitals).

Individual hospital technical and scale efficiency

Table 2 presents data on individual hospital technical and scale efficiency during FY 2009/10 to FY 2013/14. The table shows individual DEA hospital scores for constant returns to scale technical efficiency, variable returns to scale technical efficiency, scale efficiency, and returns to scale.

| Hospital | 2009/10 CRS_TE | 2009/10 VRS_TE | 2009/10 SCALE | 2009/10 RTS | 2010/11 CRS_TE | 2010/11 VRS_TE | 2010/11 SCALE | 2010/11 RTS | 2011/12 CRS_TE | 2011/12 VRS_TE | 2011/12 SCALE | 2011/12 RTS | 2012/13 CRS_TE | 2012/13 VRS_TE | 2012/13 SCALE | 2012/13 RTS | 2013/14 CRS_TE | 2013/14 VRS_TE | 2013/14 SCALE | 2013/14 RTS | Average CRS_TE | Average VRS_TE | Average SCALE | Times on frontier CRS_TE | Times on frontier VRS_TE | Times on frontier SCALE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arua | 0.81 | 0.84 | 0.96 | IRS | 1.00 | 1.00 | 1.00 | CRS | 0.94 | 0.94 | 1.00 | CRS | 0.77 | 0.86 | 0.90 | DRS | 0.78 | 0.84 | 0.93 | DRS | 0.86 | 0.89 | 0.96 | 1 | 1 | 2 |
| Fort Portal | 1.00 | 1.00 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 0.81 | 0.84 | 0.96 | IRS | 0.76 | 0.92 | 0.83 | DRS | 0.91 | 1.00 | 0.91 | DRS | 0.90 | 0.95 | 0.94 | 2 | 3 | 2 |
| Gulu | 0.94 | 0.97 | 0.97 | IRS | 0.62 | 0.65 | 0.95 | IRS | 0.94 | 0.97 | 0.97 | IRS | 1.00 | 1.00 | 1.00 | CRS | 0.75 | 0.81 | 0.92 | IRS | 0.85 | 0.88 | 0.96 | 1 | 1 | 1 |
| Hoima | 0.93 | 1.00 | 0.93 | IRS | 0.80 | 0.83 | 0.97 | IRS | 0.86 | 0.86 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 0.92 | 0.92 | 1.00 | CRS | 0.90 | 0.92 | 0.98 | 1 | 2 | 3 |
| Jinja | 0.87 | 0.98 | 0.89 | DRS | 0.70 | 0.88 | 0.80 | DRS | 0.61 | 0.73 | 0.83 | IRS | 0.73 | 0.88 | 0.84 | DRS | 0.72 | 0.96 | 0.75 | DRS | 0.73 | 0.89 | 0.82 | 0 | 0 | 0 |
| Kabale | 0.73 | 0.75 | 0.97 | IRS | 1.00 | 1.00 | 1.00 | CRS | 0.45 | 0.47 | 0.96 | IRS | 0.81 | 0.84 | 0.96 | IRS | 1.00 | 1.00 | 1.00 | CRS | 0.80 | 0.81 | 0.98 | 2 | 2 | 2 |
| Masaka | 0.83 | 0.83 | 1.00 | IRS | 0.98 | 1.00 | 0.98 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.98 | 1.00 | 0.98 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.96 | 0.97 | 0.99 | 2 | 4 | 2 |
| Mbale | 0.83 | 1.00 | 0.83 | DRS | 0.88 | 1.00 | 0.88 | DRS | 0.87 | 0.99 | 0.88 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.82 | 1.00 | 0.82 | DRS | 0.88 | 1.00 | 0.88 | 1 | 4 | 1 |
| Soroti | 1.00 | 1.00 | 1.00 | CRS | 0.88 | 0.97 | 0.91 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.90 | 0.94 | 0.95 | DRS | 0.95 | 1.00 | 0.95 | DRS | 0.94 | 0.98 | 0.96 | 2 | 3 | 2 |
| Lira | 0.82 | 0.82 | 1.00 | IRS | 0.85 | 0.93 | 0.92 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.90 | 1.00 | 0.90 | DRS | 1.00 | 1.00 | 1.00 | CRS | 0.92 | 0.95 | 0.96 | 2 | 3 | 2 |
| Mbarara | 1.00 | 1.00 | 1.00 | CRS | 0.98 | 1.00 | 0.98 | DRS | 1.00 | 1.00 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 0.98 | 1.00 | 0.98 | DRS | 0.99 | 1.00 | 0.99 | 3 | 5 | 3 |
| Mubende | 1.00 | 1.00 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 0.72 | 0.72 | 1.00 | CRS | 0.90 | 1.00 | 0.90 | IRS | 1.00 | 1.00 | 1.00 | CRS | 0.92 | 0.94 | 0.98 | 3 | 4 | 4 |
| Moroto | 0.81 | 0.87 | 0.93 | DRS | 0.68 | 0.68 | 1.00 | CRS | 0.48 | 0.48 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 1.00 | 1.00 | 1.00 | CRS | 0.79 | 0.80 | 0.99 | 2 | 2 | 4 |
| Max | 1.00 | 1.00 | 1.00 | | 1.00 | 1.00 | 1.00 | | 1.00 | 1.00 | 1.00 | | 1.00 | 1.00 | 1.00 | | 1.00 | 1.00 | 1.00 | | | | | | | |
| Min | 0.73 | 0.75 | 0.83 | | 0.62 | 0.65 | 0.80 | | 0.45 | 0.47 | 0.83 | | 0.73 | 0.84 | 0.83 | | 0.72 | 0.81 | 0.75 | | | | | | | |
| Mean | 0.89 | 0.93 | 0.96 | | 0.87 | 0.92 | 0.95 | | 0.82 | 0.85 | 0.97 | | 0.90 | 0.96 | 0.94 | | 0.91 | 0.96 | 0.94 | | | | | | | |

Table 2: Hospital’s technical and scale efficiency during FY 2009/10 to FY 2013/14.

Over the five year period, there was a general increase in the proportion of hospitals operating under constant returns to scale (CRS). This proportion increased from 31% in FY 2009/10 to 38% the following FY before rising sharply to 62% in FY 2011/12. It then declined to 38% the following FY before rising to 46% in FY 2013/14. For these hospitals, health service outputs would increase in the same proportion as any increase in health service inputs; these hospitals were operating at their most productive scale sizes.

Similarly, there was a general increase in the proportion of hospitals operating under decreasing returns to scale (DRS) over the five year period. This proportion doubled from 23% in FY 2009/10 to 46% in FY 2010/11, before sharply declining to 8% the following FY. It then increased sharply to 46% in FY 2012/13, remaining constant during FY 2013/14. For these hospitals, health service outputs would increase by a smaller proportion than any increase in health service inputs; these hospitals would have to reduce their size to achieve optimal scale.

In contrast, there was a general decrease in the proportion of hospitals operating under increasing returns to scale (IRS). This proportion sharply decreased from 46% in FY 2009/10 to 15% in the following FY before rising to 31% during FY 2011/12. It then declined to 15% the following year before registering a further decline to 8% in FY 2013/14. For these hospitals, health service outputs would increase by a greater proportion than any increase in health service inputs; the hospitals would need to increase their size to achieve optimal scale, i.e., the scale at which there are constant returns to scale in the relationship between inputs and outputs.
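The proportions quoted for each financial year follow from tallying the returns-to-scale labels in Table 2. The sketch below reproduces the FY 2009/10 figures; the variable names are illustrative.

```python
from collections import Counter

# Returns-to-scale classification for FY 2009/10, taken from Table 2
rts_2009_10 = {
    "Arua": "IRS", "Fort Portal": "CRS", "Gulu": "IRS", "Hoima": "IRS",
    "Jinja": "DRS", "Kabale": "IRS", "Masaka": "IRS", "Mbale": "DRS",
    "Soroti": "CRS", "Lira": "IRS", "Mbarara": "CRS", "Mubende": "CRS",
    "Moroto": "DRS",
}

counts = Counter(rts_2009_10.values())
shares = {label: round(100 * n / len(rts_2009_10)) for label, n in counts.items()}
print(counts, shares)
```

For FY 2009/10 this gives 4 hospitals under CRS (31%), 6 under IRS (46%) and 3 under DRS (23%), in line with the text.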

As Table 2 indicates, there were fluctuations in individual hospital efficiency scores from one FY to the next over the five year study period. The table also shows the number of times each individual hospital was on the efficiency frontier over the study period. None of the hospitals was on the constant returns to scale or scale efficiency frontier in all five years (score of 100%). The highest number of times an individual hospital was on the CRS efficiency frontier over the five year period was 3, with 2 hospitals (15%) achieving this. The highest number of times an individual hospital was on the scale efficiency frontier was 4, with 2 hospitals (15%) achieving this. Regarding variable returns to scale technical efficiency, one hospital was on the frontier in all five years (score of 100%). This hospital can be considered the most efficient hospital in our sample over the study period. One hospital did not appear at all on the constant returns to scale, variable returns to scale or scale efficiency frontier. This hospital can be considered the least efficient hospital in our sample over the study period.

Changes in mean efficiency scores

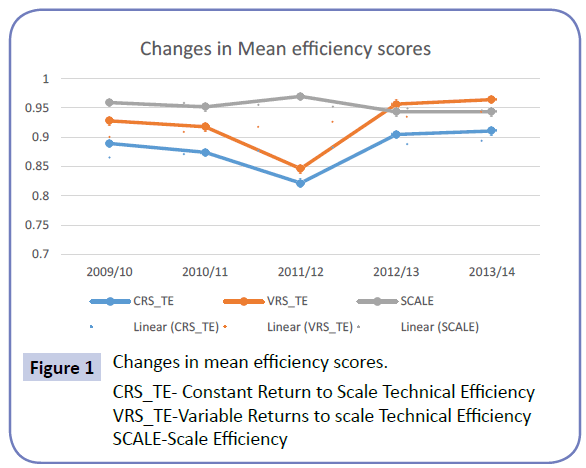

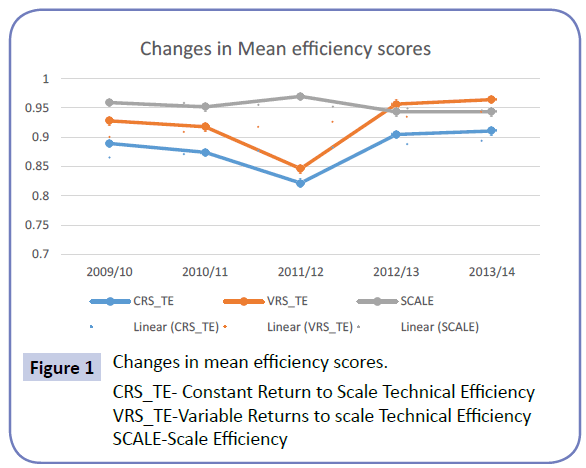

Figure 1 shows the changes in the mean Constant returns to scale, Variable returns to scale and scale efficiency scores of the hospitals in our sample over the five year study period.

Figure 1: Changes in mean efficiency scores.

CRS_TE: Constant Returns to Scale Technical Efficiency

VRS_TE: Variable Returns to Scale Technical Efficiency

SCALE: Scale Efficiency

Generally, over the five year study period, there was an increase in the mean Constant returns to scale, and mean Variable returns to scale efficiency scores; while there was a decline in the mean scale efficiency scores for the hospitals in our sample. As can be seen from Figure 1, changes in average Constant returns to scale and Variable returns to scale efficiency scores mirrored each other increasing and decreasing in the same time periods.

The mean variable returns to scale technical efficiency score for the hospitals in our sample declined marginally from 93% in FY 2009/10 to 92% in FY 2010/11, declining further to 85% in FY 2011/12. It then increased to 96% in FY 2012/13 and stayed constant at 96% in FY 2013/14. Over the five year study period, the mean variable returns to scale efficiency score for all the hospitals in the sample was 92%, meaning that if run efficiently, on average, the hospitals could have produced 8% more output (OPD visits, deliveries and in-patient days) for the same volume of inputs.

Similarly, the mean constant returns to scale technical efficiency score for the hospitals in our sample declined marginally from 89% in FY 2009/10 to 87% in FY 2010/11, declining further to 82% in FY 2011/12. It then increased to 90% in FY 2012/13 and further increased marginally to 91% in FY 2013/14. Over the five year study period, the mean constant returns to scale efficiency score for all the hospitals in the sample was 88%.

In contrast, the mean scale efficiency score for the hospitals in our sample remained almost constant in FY 2009/10 (95.9%) and FY 2010/11 (95.2%) before increasing slightly to 97.0% in FY 2011/12. It then declined to 94% in FY 2012/13 and stayed constant in FY 2013/14 (94%). Over the five year study period, the average scale efficiency score for all the hospitals in the sample was 95%.

Changes in technical efficiency

Over the five year period, there was a general increase in the number of hospitals that were constant return to scale technically efficient and those that were variable returns to scale technically efficient, though the increase in the latter was marginal.

The proportion of hospitals that were variable returns to scale technically efficient (pure technically efficient) increased from 46% in FY 2009/10 to 54% the following FY before declining to 31% in FY 2011/12. This proportion then doubled to 62% the following FY before increasing slightly to 69% in FY 2013/14.

During the five year study period, the average pure technical efficiency score of the sampled hospitals was 92%. This means that, on average, the pure technically inefficient hospitals would need to increase their outputs by 8% in order to become efficient. The lowest individual hospital pure technical efficiency score during the five year period was 46%, registered in FY 2011/12.

During the five year study period, the proportion of hospitals that were constant returns to scale technically efficient stayed constant at 31% for the first three FYs (FY 2009/10 to FY 2011/12) before increasing to 38% in FY 2012/13 and staying constant in FY 2013/14. The average constant returns to scale technical efficiency score over the five year period was 88%, meaning that on average the constant returns to scale technically inefficient hospitals would need to increase their outputs by 12% to become constant returns to scale technically efficient. The lowest individual hospital constant returns to scale technical efficiency score registered during the five year period was 45%, in FY 2011/12.

Changes in scale efficiency

During the 5 year study period, the proportion of hospitals that were scale efficient (scale efficiency score of 100%) showed a general positive trend, increasing from 31% in the 2009/10 FY to 38% the following FY, before increasing sharply to 62% in the 2011/12 FY. It then declined to 38% the following year before increasing to 46% in the 2013/14 FY.

The average scale efficiency score over the five year period was 95%, meaning that on average the scale inefficient hospitals would need to increase their outputs by 5% to become scale efficient. The lowest mean scale efficiency score registered in any year of the five year period was 94%, in both FY 2012/13 and FY 2013/14.
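The scale efficiency scores in Table 2 are consistent with the usual DEA definition, in which scale efficiency is the ratio of the CRS technical efficiency score to the VRS technical efficiency score. The source does not state this formula explicitly, so it is an assumption here; the check below uses three FY 2009/10 rows of Table 2, and the function name is illustrative.

```python
def scale_efficiency(crs_te: float, vrs_te: float) -> float:
    """Scale efficiency as the ratio of CRS to VRS technical efficiency."""
    return crs_te / vrs_te


# (CRS_TE, VRS_TE, reported SCALE) for FY 2009/10, taken from Table 2
table2_2009_10 = {
    "Arua": (0.81, 0.84, 0.96),
    "Jinja": (0.87, 0.98, 0.89),
    "Mbale": (0.83, 1.00, 0.83),
}

for hospital, (crs, vrs, reported) in table2_2009_10.items():
    computed = round(scale_efficiency(crs, vrs), 2)
    print(hospital, computed, reported)
```

Under this definition a hospital is scale efficient exactly when its CRS and VRS scores coincide, which is why the proportion of scale efficient hospitals tracks the proportion operating under constant returns to scale.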

Scope for output increases

Table 3 shows the total output increases that would have been needed each financial year to make the variable returns to scale inefficient hospitals efficient during the five-year study period. In FY 2009/10, for example, the inefficient hospitals combined would have needed to increase outpatient visits by 828,896 (61%), deliveries by 1,510 (3%) and inpatient days by 60,704 (5%) to become efficient.

| FY | OPD: Actual | OPD: Shortfall | OPD: % shortfall | Deliveries: Actual | Deliveries: Shortfall | Deliveries: % shortfall | Patient days: Actual | Patient days: Shortfall | Patient days: % shortfall |
|---|---|---|---|---|---|---|---|---|---|
| 2009/10 | 1,360,125 | 828,896 | 61% | 53,794 | 1,510 | 3% | 1,320,454 | 60,704 | 5% |
| 2010/11 | 1,526,571 | 406,021 | 27% | 61,660 | 2,961 | 5% | 1,271,741 | - | 0% |
| 2011/12 | 1,580,313 | 205,400 | 13% | 72,324 | 11,747 | 16% | 1,221,371 | 1,091,519 | 89% |
| 2012/13 | 2,129,102 | 402,882 | 19% | 73,018 | 16,878 | 23% | 1,235,194 | 11,429 | 1% |
| 2013/14 | 8,497,417 | 959,119 | 11% | 327,942 | 3,286 | 1% | 6,270,605 | - | 0% |
| Total | 15,093,528 | 2,802,318 | 19% | 588,738 | 36,383 | 6% | 11,319,365 | 1,163,652 | 10% |

Table 3: Total output increases needed each financial year to make the inefficient hospitals efficient during FY 2009/10 to FY 2013/14.

Overall, over the five year period, to become efficient, the technically inefficient hospitals would have needed to increase the outpatient department visits by a total of 2,802,318 visits (19%), deliveries by 36,383 (6%) and inpatient days by 1,163,652 (10%).
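The percentage figures in Table 3 appear to be the shortfall expressed as a share of actual output. The short Python sketch below reproduces the three overall percentages from the totals row under that assumption; the function name is illustrative.

```python
# Totals row of Table 3: (actual output, shortfall needed to become efficient)
totals = {
    "OPD visits": (15_093_528, 2_802_318),
    "Deliveries": (588_738, 36_383),
    "Patient days": (11_319_365, 1_163_652),
}


def pct_shortfall(actual: int, shortfall: int) -> float:
    """Shortfall expressed as a percentage of actual output."""
    return 100 * shortfall / actual


for output, (actual, shortfall) in totals.items():
    print(f"{output}: {pct_shortfall(actual, shortfall):.0f}%")
```

The same function can be applied to any single financial year in the table, for example FY 2009/10 outpatient visits (828,896 against 1,360,125 actual visits).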

Productivity changes

For the calculation of the Malmquist Total Factor Productivity Index, the FY 2010/2011 was taken as the technology reference year (t) in order to compute the changes in hospital productivity over time.

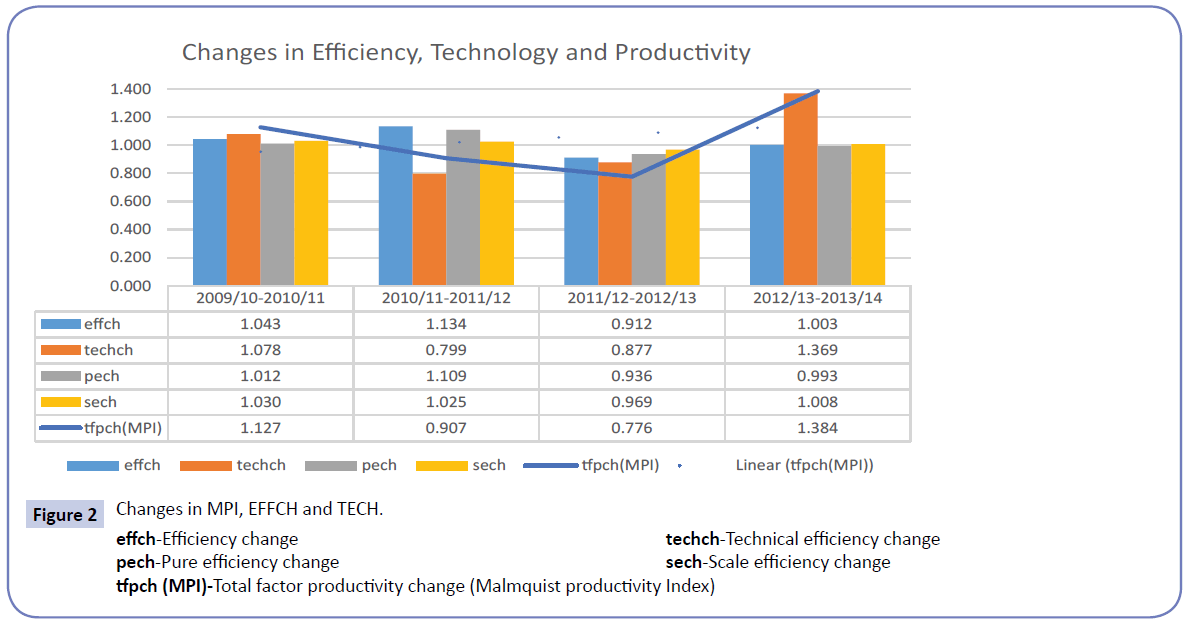

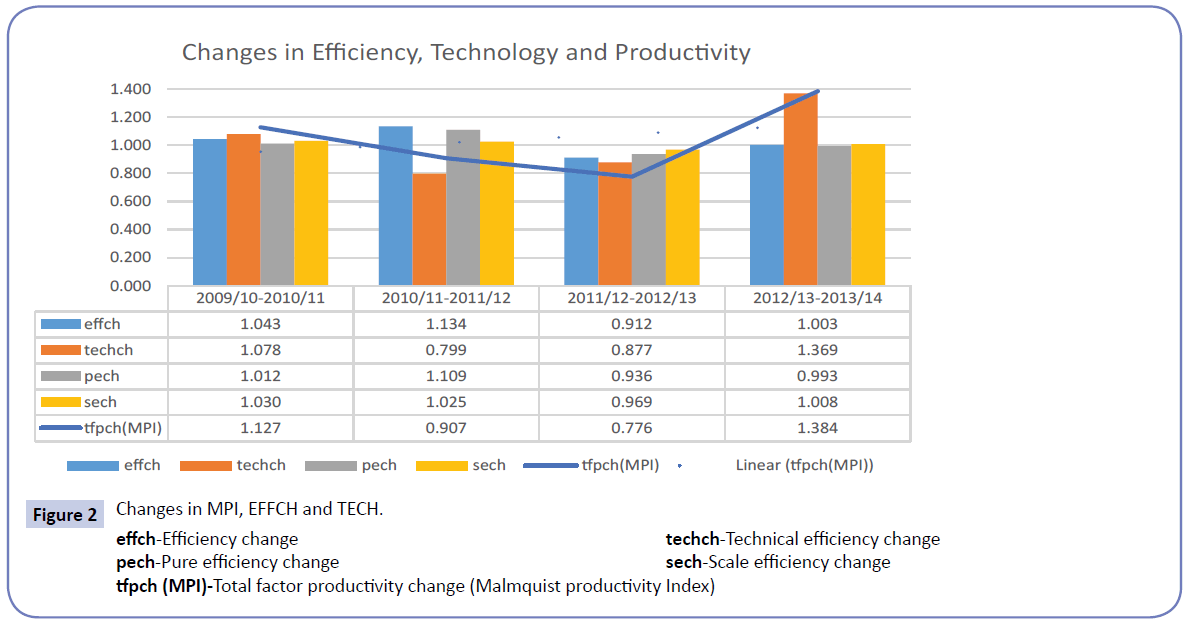

Figure 2 presents the Malmquist index annual geometric means for the five FYs considered in our study.

Figure 2: Changes in MPI, EFFCH and TECH.

effch: efficiency change; techch: technological change; pech: pure efficiency change; sech: scale efficiency change; tfpch (MPI): total factor productivity change (Malmquist productivity index).

Overall, over the five-year period, hospital productivity grew from an average MTFP index score of 1.127 between FY 2009/10 and FY 2010/11 (the first period of our study) to an average of 1.384 between FY 2012/13 and FY 2013/14 (the final period). Between these two periods, however, productivity declined: the average MTFP index score fell to 0.907 between FY 2010/11 and FY 2011/12 and further to 0.776 between FY 2011/12 and FY 2012/13, before rising to 1.384 between FY 2012/13 and FY 2013/14.

The average MTFP index score for the sampled hospitals during the study period was 1.049, meaning that, overall, the hospitals improved their performance during the study period, increasing their productivity on average by about 5%. The highest average MTFP index score of 1.384 was registered between FY 2012/13 and FY 2013/14, while the lowest average MTFP index score of 0.776 was registered between FY 2011/12 and FY 2012/13.
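As a quick arithmetic check, the four period averages quoted in the text can be summarized in two ways. The Python sketch below computes both the arithmetic and the geometric mean of those four scores; how the authors derived the overall figure of 1.049 is not stated, so treating it as a mean of period averages is an assumption.

```python
from statistics import fmean, geometric_mean

# Average MTFP index for each pair of adjacent financial years, as quoted in the text
mtfp_by_period = {
    "2009/10-2010/11": 1.127,
    "2010/11-2011/12": 0.907,
    "2011/12-2012/13": 0.776,
    "2012/13-2013/14": 1.384,
}

values = list(mtfp_by_period.values())
arithmetic = fmean(values)          # simple average of the four period scores
geometric = geometric_mean(values)  # average compound growth factor per period

print(f"arithmetic mean: {arithmetic:.4f}")
print(f"geometric mean:  {geometric:.4f}")
```

The arithmetic mean of the four scores is about 1.0485, consistent with the reported 1.049. The geometric mean, which describes compound growth across successive periods, is lower at about 1.024.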

The proportion of hospitals registering productivity growth varied between FYs. For example, 54% of hospitals had an MTFP index of more than 1 between FY 2009/10 and FY 2010/11, implying that they experienced productivity growth. This proportion declined sharply to 23% between FY 2010/11 and FY 2011/12 and fell further to 8% between FY 2011/12 and FY 2012/13. Between FY 2012/13 and FY 2013/14, all the hospitals (100%) experienced productivity growth, with one hospital more than doubling its productivity (MPI score of 2.321). The across-the-board growth in productivity between FY 2012/13 and FY 2013/14 can be attributed to the fact that most of the hospitals (92%) had experienced a productivity decline in the previous period and were now recovering.
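The reported proportions are consistent with whole-number counts out of a sample of 13 hospitals. The sketch below illustrates this in Python; both the sample size of 13 and the counts are assumptions chosen to match the percentages in the text, not figures taken from the study data.

```python
N_HOSPITALS = 13  # assumed sample size; not stated in this section

# Hypothetical counts of hospitals with an MTFP index above 1 in each period,
# chosen so that they reproduce the percentages reported in the text.
growth_counts = {
    "2009/10-2010/11": 7,
    "2010/11-2011/12": 3,
    "2011/12-2012/13": 1,
    "2012/13-2013/14": 13,
}


def share(count: int, total: int = N_HOSPITALS) -> int:
    """Percentage of hospitals, rounded to the nearest whole number."""
    return round(100 * count / total)


for period, count in growth_counts.items():
    print(f"{period}: {count}/{N_HOSPITALS} = {share(count)}%")
```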

Decomposition of productivity growth into technological and efficiency change

Generally, over the five year study period, changes in the MTFP index score were driven largely by technological changes rather than changes in efficiency. As can be seen from Fig 2, changes in the MTFP index mirror changes in technology change, except for the period between FY 2011/12 and 2012/13. In this time period, in tandem with a decline in efficiency score, the MTFP index declined despite an increase in the technology score, implying that the change in productivity was driven by efficiency change. The increase in productivity between the 2012/2013 and 2013/2014 FY was driven by increases in both technology and efficiency scores.

Technological changes

Between FY 2009/10 and FY 2010/11, 23% of hospitals registered a technical change (TECH) score of less than one, indicating a decline in technical innovation. This proportion increased to 85% between FY 2010/11 and FY 2011/12 before declining to 77% between FY 2011/12 and FY 2012/13. Between FY 2012/13 and FY 2013/14, all the hospitals registered a technical change score greater than one, indicating technological progress in that period.

The overall average technology change score over the five-year study period was 1.031. The hospitals registered technological improvements in the periods between FY 2009/10 and FY 2010/11 and between FY 2012/13 and FY 2013/14, as indicated by a technological change score greater than 1. In the remaining periods, the hospitals experienced technological declines, as indicated by a technological change score of less than 1.

Efficiency changes

The overall average efficiency change score over the five-year study period was 1.023. On average, the hospitals registered efficiency improvements (efficiency change scores greater than 1) in every period except that between FY 2011/12 and FY 2012/13, when they registered a sharp decline (efficiency change score less than 1). However, the improvements were not sufficient to overturn the sharp decline in efficiency changes; thus over the five-year period, there was a general decline in efficiency change. In line with the efficiency decline, there was a general decline in pure efficiency and scale efficiency change over the five-year period.

The figure for efficiency change may be obtained while assuming CRS, but in reality hospitals could face scale inefficiencies due to DRS or IRS. Using the VRS assumption, we decompose the efficiency change index into pure efficiency change and scale efficiency change.
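For reference, the two-stage decomposition described here can be written out explicitly. The block below is a sketch in conventional output-distance-function notation, where $D_o^{t}(x, y)$ denotes the output distance function measured against the period-$t$ frontier under CRS, and the subscripts CRS and VRS mark the returns to scale assumption. These symbols are an assumption and may differ from those used in the methods section.

```latex
\begin{align*}
\mathrm{MPI}
  &= \left[
       \frac{D_o^{t}(x^{t+1}, y^{t+1})}{D_o^{t}(x^{t}, y^{t})}
       \cdot
       \frac{D_o^{t+1}(x^{t+1}, y^{t+1})}{D_o^{t+1}(x^{t}, y^{t})}
     \right]^{1/2} \\
  &= \underbrace{\frac{D_o^{t+1}(x^{t+1}, y^{t+1})}{D_o^{t}(x^{t}, y^{t})}}_{\mathrm{EFFCH}}
     \times
     \underbrace{\left[
       \frac{D_o^{t}(x^{t+1}, y^{t+1})}{D_o^{t+1}(x^{t+1}, y^{t+1})}
       \cdot
       \frac{D_o^{t}(x^{t}, y^{t})}{D_o^{t+1}(x^{t}, y^{t})}
     \right]^{1/2}}_{\mathrm{TECHCH}} \\[6pt]
\mathrm{EFFCH}
  &= \underbrace{\frac{D_{o,\mathrm{VRS}}^{t+1}(x^{t+1}, y^{t+1})}
                      {D_{o,\mathrm{VRS}}^{t}(x^{t}, y^{t})}}_{\mathrm{PECH}}
     \times
     \underbrace{\frac{D_{o,\mathrm{CRS}}^{t+1}(x^{t+1}, y^{t+1}) \,/\, D_{o,\mathrm{VRS}}^{t+1}(x^{t+1}, y^{t+1})}
                      {D_{o,\mathrm{CRS}}^{t}(x^{t}, y^{t}) \,/\, D_{o,\mathrm{VRS}}^{t}(x^{t}, y^{t})}}_{\mathrm{SECH}}
\end{align*}
```

Each component is read in the same way as the overall index: a value greater than 1 indicates improvement between period $t$ and period $t+1$, a value of 1 indicates no change, and a value below 1 indicates deterioration.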

Pure efficiency changes

The pure efficiency change (PECH) measures changes in proximity of hospitals to the frontier, devoid of scale effects.

Over the five-year study period, the average pure efficiency change score for the sampled hospitals was 1.012. This means that over the study period, overall, there was an increase of about 1% in pure technical efficiency.

The proportion of hospitals registering a pure technical efficiency increase (pure efficiency change score greater than one) varied over the study period. The proportion increased from 31% between FY 2009/10 and FY 2010/11 to 38% between FY 2010/11 and FY 2011/12. It then declined to 15% between FY 2011/12 and FY 2012/13 before increasing again to 23% between FY 2012/13 and FY 2013/14.

The proportion of hospitals registering no change in pure technical efficiency (pure efficiency change score of one) declined from 38% between FY 2009/10 and FY 2010/11 to 31% between FY 2010/11 and FY 2011/12. It then increased to 38% between FY 2011/12 and FY 2012/13 and further to 46% between FY 2012/13 and FY 2013/14.

Scale efficiency change

Over the five year study period, the overall average SECH score for the entire sample was 1.008, indicating that the scale of production on average increased efficiency change by about 1 percent.

The proportion of hospitals in which scale of production contributed positively to productivity change (scale efficiency change score greater than 1) declined from 54% between FY 2009/10 and FY 2010/11 to 38% between FY 2010/11 and FY 2011/12. The proportion then rose to 46% between FY 2011/12 and FY 2012/13 and remained at that level between FY 2012/13 and FY 2013/14.

Scale of production did not contribute to productivity growth (scale efficiency change score of 1) in 15% of the sampled hospitals between FY 2009/10 and FY 2010/11. Between FY 2010/11 and FY 2011/12, none of the hospitals registered a scale efficiency change score of one, indicating that during that period scale of production contributed either positively or negatively towards efficiency change. The proportion of hospitals in which scale of production did not contribute towards productivity growth was 8% both between FY 2011/12 and FY 2012/13 and between FY 2012/13 and FY 2013/14.

Generally, over the five-year period, efficiency change (increase or decrease) in each individual period was attributable to an increase or decrease in both pure efficiency and scale efficiency, except in the FY 2012/13 to FY 2013/14 period, when efficiency increased in tandem with an increase in scale efficiency despite a decline in pure technical efficiency.

Significance of changes in productivity, efficiency and technology over time

To determine if the productivity and efficiency changes observed between the different time periods were significant, we conducted an equality of means test using the t-statistic. The results are shown in Table 4.

| Time period | EFCH: t | EFCH: Sig. (2-tailed) | EFCH: Correlation | EFCH: Sig. | TECHCH: t | TECHCH: Sig. (2-tailed) | TECHCH: Correlation | TECHCH: Sig. | MPI: t | MPI: Sig. (2-tailed) | MPI: Correlation | MPI: Sig. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FY 09/10-10/11 vs FY 10/11-11/12 | -0.6234 | 0.5447 | -0.5170 | 0.0700 | 5.4981 | 0.0000 | 0.0720 | 0.8150 | 1.4194 | 0.1812 | -0.5400 | 0.0570 |
| FY 10/11-11/12 vs FY 11/12-12/13 | 1.4434 | 0.1745 | -0.6730 | 0.0120 | -0.7394 | 0.4740 | -0.7480 | 0.0030 | 0.8986 | 0.3865 | -0.5720 | 0.0410 |
| FY 11/12-12/13 vs FY 12/13-13/14 | -1.2825 | 0.2239 | 0.0390 | 0.9000 | -4.8060 | 0.0000 | -0.7450 | 0.0030 | -4.6924 | 0.0005 | -0.3980 | 0.1780 |
| FY 09/10-10/11 vs FY 12/13-13/14 | 0.9130 | 0.3792 | 0.6420 | 0.0180 | -7.3842 | 0.0000 | 0.3680 | 0.2160 | -3.5225 | 0.0042 | 0.6350 | 0.0200 |

EFCH: efficiency change; TECHCH: technological change; MPI: Malmquist Productivity Index.

Table 4: Test of equality of means of productivity and efficiency changes between financial years.
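The correlation columns in Table 4 suggest that the comparison was run as a paired-samples t-test on the same hospitals across two periods, although the text describes it only as an equality of means test. Under that assumption, the t statistic can be sketched in Python as follows; the function name and the MPI scores are hypothetical and are not the study data.

```python
import math
from statistics import mean, stdev


def paired_t(first: list[float], second: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for a paired-samples t-test."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(first, second)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    if se == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    return mean(diffs) / se, len(diffs) - 1


# Hypothetical MPI scores for the same five hospitals in two consecutive periods
mpi_period_1 = [1.10, 0.95, 1.20, 1.05, 0.90]
mpi_period_2 = [0.85, 0.90, 0.95, 1.00, 0.80]

t_stat, df = paired_t(mpi_period_1, mpi_period_2)
print(f"t = {t_stat:.4f}, df = {df}")
```

The two-tailed significance is then read from the Student t distribution with the returned degrees of freedom. A statistics package such as SciPy (`scipy.stats.ttest_rel`) returns the t statistic and the p-value together.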

The equality of means test of MPI across the time periods indicates significant changes in MPI scores between FY 11/12-12/13 and FY 12/13-13/14 (P < 0.01); we therefore reject the null hypothesis of no change in productivity over the time periods. It is important to note that the time periods with significant changes in productivity also showed significant technological change, indicating that this was the main driver of productivity.

The results show that across the time periods, the changes in efficiency are not significant (P > 0.01). This confirms the earlier result that the productivity changes observed in the hospitals over the time period were not due to efficiency changes. In contrast, the results show that the technological changes between the various time periods were significant (P < 0.01) except for the period between FY 10/11-11/12 and FY 11/12-12/13. This confirms the earlier result that the productivity changes in the hospitals over the time period were mainly due to technological changes.

Discussion

The measurement of productivity to evaluate the performance of hospitals within health systems has been widely applied [13-23] and is accepted by health economists as a standard tool for performance tracking and evaluation. Such tracking and evaluation is important to both policy makers and health administrators. Comparison among hospitals can, for example, indicate how hospitals perform relative to their peers, and measurement of productivity over time can indicate whether productivity is growing or declining, enabling corrective action to be taken.

Accordingly, the purpose of this paper was to assess productivity growth of regional referral hospitals in Uganda over a five-year period, taking into account changes in both efficiency and technology. We used the Malmquist index to measure productivity changes over the study period.

A key finding from our study is that the productivity of public sector regional referral hospitals in Uganda grew marginally over the five financial years covering the period 2009/10 to 2013/14. The observed average MTFP index score of 1.049 for the sampled hospitals during the study period indicates that the hospitals on average increased their productivity by about 5% between each considered period. However, growth in productivity varied between time periods; the initial three time periods of the study registered a gradual decline before a sharp increase in productivity. This sharp increase accounts for the overall increase in productivity observed.

Our results further indicate that growth in productivity was due mainly to technological progress rather than efficiency improvement. During the time periods with significant changes in productivity, there were significant changes in technological change (P < 0.01), while the changes in efficiency across all the time periods were not significant (P > 0.01), confirming technological change as the main driver of productivity. There is thus additional scope for improving productivity by improving hospital efficiency.

In a broad economic sense, technological change (innovation), the main driver of productivity growth is related to investment, i.e., a change in capital stock. Capital accumulation occurs when organizations invest in more or better machinery, equipment, and structures that make it possible for them to produce more output. This shifts the efficiency frontier towards the optimum. Our results show that during the study period, the hospitals in our sample experienced technological progress which allowed greater output. Theoretically, this progress may have resulted from the application of improved health technologies to health service production processes; increases in health workforce, its motivation or skill; or from improvements in health services organization. The data shows that overall during the five year period, there was growth in the number of total staff. This suggests increases in the health workforce as the key technological change driving the observed productivity increase.

Overall, we observe three combinations of technical efficiency change and technological change over the five year period based on the average technical efficiency and technological change scores:

First, there are two time periods in which improvements in technical efficiency coexist with improvements in technological change. These are the periods with improvements registered in technical efficiency, denoting upgraded organizational factors associated with the use of inputs and outputs, as well as the relationship between inputs and outputs. This happens in the periods between FY 2009/10 and FY 2010/11 and between FY 2012/13 and FY 2013/14. These are the time periods when the hospitals in our study can be considered to have been most productive overall. Unsurprisingly, the highest average MTFP index score of 1.384 was registered between FY 2012/13 and FY 2013/14.

Secondly, there is a period when improvements in technical efficiency co-exist with deterioration in the technology score. This happens in the period between FY 2010/11 and FY 2011/12. During this time period, overall, the hospitals experience upgraded organizational factors, but without the innovation inherent in investment in new technology, which would provide leverage for the organizational factors. During this period, the hospitals would have needed to acquire new technology and the necessary commensurate skill upgrades in order to improve their performance.

Thirdly, there is a time period when deteriorating technical efficiency co-exists with deteriorating technology; this happens between FY 2011/12 and FY 2012/13. This is the time period when the hospitals in our study may be considered to have been least productive overall. Unsurprisingly, the lowest average MTFP index score of 0.776 was registered between FY 2011/12 and FY 2012/13.

The productivity growth (MTFP) average score of 1.049 found in the present study is comparable to findings in other countries in which hospitals also had an average score greater than one, signifying productivity growth: Angola municipal hospitals had 1.045 [13]; Brazilian Federal University hospitals had 1.209 [22]; China coastal hospitals had 1.1307 [20]; India district hospitals had 1.2358 [19]; Ireland regional hospitals had 1.028 [18]; and Portugal hospitals had 1.042 [17]. However, the sources of growth in the various countries are quite different. While hospitals in Ukraine and in South Africa experienced technological regression combined with improvement in efficiency, the productivity growth of Guangdong hospitals reflected improvement in both technology and efficiency over the studied period.

In contrast, studies in other countries have found average MTFP scores of less than one signifying declines in productivity: Botswana district hospitals had a score of 0.985 [14], Montreal Canada of 0.92 [16], Greece of 0.986-0.988 [20,21], South Africa of 0.879 [15] and Taiwan of 0.7877 [23].

The results of our analyses have interesting policy implications for development of the health system in Uganda. We wish to stress, however, that the findings of the study depend critically on the choice of inputs and outputs, and hence the policy implications discussed below should be considered within this perspective. Our results indicate that, overall, over the five-year period, to become efficient, the technically inefficient hospitals would have needed to increase outpatient department visits by a total of 2,802,318 visits (19%), deliveries by 36,383 (6%) and inpatient days by 1,163,652 (10%) without increasing any of the inputs. This reflects a lost opportunity for the hospitals to contribute towards improvements in access to health services and hence the health status of Ugandans. Thus, there is room for MOH policy-makers in Uganda to improve hospital productivity and efficiency by improving access to and utilization of under-utilized services.

One way of improving utilization is through increasing financial access to hospital services. Currently, hospital services in public sector hospitals are funded through general taxes and are supposed to be free of charge to clients. However, due to limited government funding, the hospitals do not always have the required inputs to ensure service delivery thus reducing efficiency. Currently, Uganda does not have a national social health insurance scheme although there are ongoing discussions to introduce one. There is need to expedite introduction of social health insurance in order to increase financial access by clients and to increase available resource envelope for hospital inputs and contribute to increased hospital efficiency and productivity.

Study limitations and suggestions for future research

The performance of organizations like hospitals can be addressed from various angles. The work by Cutler and Berndt [32] provides an in-depth discussion of health care output and productivity. Besides the input-output approach adopted in this study, hospital performance can be addressed from a cost perspective by considering the cost of inputs per unit of output. Cost data for inputs were not available in the present study, so an input-output relationship approach was adopted in analyzing the hospitals' performance. The applied DEA framework of relating inputs and outputs addresses efficiency issues from a production viewpoint; issues associated with allocative efficiency were left out owing to the lack of cost information. Given the pros and cons of the DEA approach, verifying efficiency and productivity change of Uganda public sector regional referral hospitals using stochastic methods is one direction for further research.

We were not able to obtain information about any changes in case-mix or severity of cases handled by the hospitals over the study period or about changes in patient outcome quality. DEA emphasizes the straightforward relationship between inputs and outputs in computing the efficiency scores or Malmquist indices, but the lack of adjustment for case-mix or outcome quality implies that the results of the present study must be interpreted with caution. The empirical analyses can only serve as an example in understanding the changes in performance of regional referral hospitals in Uganda. Future research should consider integrating quality of care indicators to further characterize hospital outputs. Incorporating indicators of changes in case mix of patients attended by the hospitals over the study period would also be an interesting subject for future research.

The study focused only on public sector regional referral hospitals and did not include private not for profit hospitals of similar capacity that are usually categorized together with the public sector regional referral hospitals. This was mainly because of lack of comprehensive and consistent data from the PNFP hospitals in the MOH AHSPR used as the data sources. Thus the results generated are strictly applicable only to the public sector referral hospitals. Due to this limitation, it was not possible to for example investigate the effect of hospital ownership on productivity and efficiency changes over the study period.

The study only used inputs and outputs for which the most data was available for all hospitals during the study period. The data was for the most part complete, though there were some gaps, particularly in FY 2011/12. Missing data on inputs was filled in using values from the previous period, whereas missing data on outputs was filled in using the average for all hospitals during the reference period. Although this was the most practical approach given the available data, the imputed figures may not reflect the true values. Additionally, some other relevant inputs, such as material supplies (pharmaceutical and non-pharmaceutical supplies), and outputs, such as major operations and laboratory tests conducted, were left out of the estimation owing to a lack of data. Such omissions could mean that the efficiency estimates presented here may be slightly biased.
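The two imputation rules described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' procedure: the data layout, the function names and the example figures are hypothetical, and "reference period" is taken to mean the same financial year as the missing value.

```python
from statistics import fmean
from typing import Optional

Series = dict[str, list[Optional[float]]]  # hospital -> one value per financial year


def fill_inputs_forward(inputs: Series) -> Series:
    """Replace a missing input with the same hospital's value from the previous year."""
    filled = {}
    for hospital, values in inputs.items():
        out, previous = [], None
        for v in values:
            if v is None:
                v = previous  # stays None if there is no earlier observation
            out.append(v)
            previous = v
        filled[hospital] = out
    return filled


def fill_outputs_with_year_mean(outputs: Series) -> Series:
    """Replace a missing output with the mean of the hospitals observed in that year."""
    n_years = len(next(iter(outputs.values())))
    year_means = []
    for year in range(n_years):
        observed = [vals[year] for vals in outputs.values() if vals[year] is not None]
        year_means.append(fmean(observed) if observed else None)
    return {
        h: [year_means[i] if v is None else v for i, v in enumerate(vals)]
        for h, vals in outputs.items()
    }


# Hypothetical data: three hospitals, three financial years, None marks a gap
beds = {"A": [100, None, 120], "B": [80, 85, None], "C": [60, 60, 65]}
deliveries = {"A": [4000, None, 4400], "B": [3000, 3200, 3100], "C": [2000, 2400, 2500]}

print(fill_inputs_forward(beds))
print(fill_outputs_with_year_mean(deliveries))
```

Carrying inputs forward assumes that staffing and bed capacity change slowly, while the year mean for outputs pulls a hospital towards the sample average, which can understate or overstate its true activity.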

Combining longitudinal studies like ours with an investigation of the environmental context over the study period should provide insightful information about the effects of health care environments, such as political and economic factors, on productivity. Besides increase in the workforce as observed in our study, technological progress depends on a number of factors, including: the availability of appropriate health technology, availability of funds to finance acquisition of the new technology, availability of training facilities and opportunities to equip the staff with the required skills to take advantage of the new technology in addition to institutional changes that may lead to better team work and communication between health policy makers, hospital managers and the hospital staff. It is not clear from our study which of these additional factors were at play hence the need for further study.

Conclusions

This paper analyses productivity and efficiency changes in public sector regional referral hospitals in Uganda over a five-year period from financial year 2009/10 to financial year 2013/14. Using DEA methods, we calculated the Malmquist Total Factor Productivity Index and decomposed it into average efficiency change and average technological change. Under the VRS assumption, we then decomposed the efficiency change further into pure efficiency change and scale efficiency change.

The results show that the average total factor productivity index of the hospitals in our sample grew over the five-year period, and that this growth was significant, especially between FY 11/12-12/13 and FY 12/13-13/14 (P < 0.01). The observed average MTFP index score of 1.049 for the sampled hospitals during the study period indicates that the hospitals on average increased their productivity by about 5% between each considered period. The results further indicate that growth in productivity was due mainly to technological progress rather than efficiency improvement. Changes in efficiency across all the time periods were not significant (P > 0.01).

Overall, over the five year period, the inefficient hospitals, taken together, would need to increase the outpatient department visits by a total of 2,802,318 visits (19%), deliveries by 36,383 (6%) and inpatient days by 1,163,652 (10%) without increasing any of the inputs in order to become efficient. There is thus scope for providing more child and maternal health services to additional persons by using the existing health system inputs more efficiently, i.e., without waste.

The applied DEA framework of relating inputs and outputs addresses efficiency issues from a production viewpoint; issues associated with allocative efficiency were left out owing to the lack of cost information. Given the pros and cons of the DEA approach, verifying efficiency and productivity change of Uganda public sector regional referral hospitals using stochastic methods is one direction for further research. Incorporating indicators of changes in case mix and outcomes of patients attended by the hospitals over the study period would also be an interesting subject for future research. Finally, combining longitudinal studies like ours with an investigation of the environmental context over the study period should provide insightful information about the effects of health care environments, such as political and economic factors, on productivity.


References

- (2000) WHO: World Health Report 2000: Health Systems: Improving Performance - Strengthened Health Systems Save More Lives. Geneva: WHO.

- (2004) Government of Uganda. National Hospital policy. Kampala: Ministry of Health.

- (2013) Government of Uganda: National Health Accounts FY 2008/09 and FY 2009/10. Kampala: Ministry of Health.

- Hensher M (2001) “Financing the Health System through Efficiency Gains.” Background paper prepared for Working Group 2 of the Commission on Macroeconomics and Health. Geneva: World Health Organization.

- (2009) Government of Uganda: National Health policy-Reducing Poverty through improving people’s health. Kampala: Ministry of Health.

- (2010) Government of Uganda: Health sector strategic and investment plan- Promoting people’s health to enhance socio-economic development 2010/11-2014/15. Kampala: Ministry of Health.

- Zere E, Mbeeli T, Shangula K, Mandlhate C, Mutirua K, et al. (2006) Technical efficiency of district hospitals: evidence from Namibia using data envelopment analysis. Cost Eff Resour Alloc 4:5.

- Marschall P, Flessa S (2009) Assessing the efficiency of rural health centres in Burkina Faso: an application of Data Envelopment Analysis. J Public Health 17:87-95.

- Sebastian MS, Lemma H (2010) Efficiency of the health extension programme in Tigray, Ethiopia: a data envelopment analysis. BMC International Health and Human Rights 10:16.

- Ichoku H, Fonta WM, Onwujekwe OE, Kirigia JM (2011) Evaluating the technical efficiency of hospitals in South Eastern Nigeria. European Journal of Business and Management 3:24-37.

- Kirigia JM, Asbu EZ (2013) Technical and scale efficiency of public community hospitals in Eritrea: an exploratory study. Health Economics Review 3:6.

- Yawe BL, Kavuma SN (2008) Technical efficiency in the presence of desirable and undesirable outputs: a case study of selected district referral hospitals in Uganda. Health Policy and Development 6:37-53.

- Kirigia JM, Emrouznejad A, Cassoma B, Asbu EZ, Barry S (2008) A performance assessment method for hospitals: the case of Municipal Hospitals in Angola. J Med Syst 32:509-519.

- Tlotlego N, Nonvignon J, Sambo LG, Asbu EZ, Kirigia JM (2010) Assessment of productivity of hospitals in Botswana: a DEA application. Int Arch Med 3:1-14.

- Zere E, McIntyre D, Addison T (2005) Hospital efficiency and productivity in three provinces of South Africa. South Afr J Econ 69:336-358.

- Ouellette P, Vierstraete V (2004) Technological change and efficiency in the presence of quasi-fixed inputs: a DEA application to the hospital sector. European Journal of Operational Research 154:755-763.

- Barros CP, Menezes AG, Peypoch N, Solonandrasana B, Vieira JV (2007) An analysis of hospital efficiency and productivity growth using the Luenberger indicator. Health Care Management Science 11:373-381.

- Gannon B (2008) Total factor productivity growth of hospitals in Ireland: a nonparametric approach. Applied Economics Letters 15:131-135.

- Dash U (2009) Evaluating the comparative performance of District Head Quarters Hospitals, 2002-07: a non-parametric Malmquist approach. Mumbai: Indira Gandhi Institute of Development Research (IGIDR).

- Ng YC (2008) The Productive Efficiency of the Health Care Sector of China. The Review of Regional Studies 38:381-393.

- Karagiannis R, Velentzas K (2012) Productivity and quality changes in Greek public hospitals. International Journal of Operations Research 12:69-81.

- Lobo MSC, Ozcan YA, Silva ACM, Lins MPE, Fiszman R (2010) Financing reform and productivity change in Brazilian teaching hospitals: Malmquist approach. Central European Journal of Operations Research 18:141-152.

- Chang SH, Hsiao HC, Huang LH, Chang H (2011) Taiwan quality indicator project and hospital productivity growth. Omega 39:14-22.

- Farrell MJ (1957) The measurement of productive efficiency. Journal of the Royal Statistical Society 120:253-281.

- Emrouznejad A, Parker BR, Tavares G (2008) Evaluation of research in efficiency and productivity: A survey and analysis of the first 30 years of scholarly literature in DEA. Socio-Economic Planning Sciences 42:151-157.

- O’Neil L, Rauner M, Heidenberger K, Kraus M (2008) A cross-national comparison and taxonomy of DEA-based hospital efficiency studies. Socio-Economic Planning Sciences 42:158-189.

- Charnes A, Cooper WW, Rhodes E (1978) Measuring the efficiency of decision making units. European Journal of Operational Research 2:429-444.

- Grifell-Tatje E, Lovell CAK (1997) The sources of change in Spanish banking. European Journal of Operational Research 98:364-380.

- Coelli TJ, Rao DSP, O’Donnell CJ, Battese GE (1998) An Introduction to Productivity and Efficiency Analysis. New York: Springer Science.

- Fare R, Grosskopf S, Lovell CAK (1994) Production frontiers. Cambridge: Cambridge University Press.

- Kirikal L, Sorg M, Vensel V (2004) Estonian banking sector performance analysis using Malmquist indexes and DuPont financial ratio analysis. International Business and Economic Research Journal 3:21-36.

- Cutler DM, Berndt ER (eds.) (2001) Medical Care Output and Productivity. Studies in Income and Wealth, vol 62. Chicago: The University of Chicago Press.
